Tests of Awkward Questions

Run by Ernest Davis on GPT-3, davinci-003, default settings, on Feb. 19, 2023

My intent was that none of these 6 stories should involve an "awkward" question, though I suppose judgments of that could vary. As can be seen, in 5 out of the 6 stories, GPT-3 judged that the question was "awkward" and could be "embarrassing".

Six is certainly a small number. However, if, as Michal Kosinski claims, the underlying false positive rate is 0.2, then the probability of getting at least 5 wrong out of 6 would be less than 0.002. Presumably, there is a systematic difference between his examples and mine.
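The figure above can be checked with a short binomial tail calculation. This is only a sketch: it assumes the 6 stories are independent trials, each with the same false positive rate of 0.2, and the function name `binomial_tail` is just an illustrative choice.

```python
from math import comb


def binomial_tail(n: int, k: int, p: float) -> float:
    """Probability of at least k 'successes' in n independent trials,
    each with success probability p (here a 'success' is a false positive)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# Assumed setup: 6 stories, false positive rate 0.2, at least 5 judged "awkward".
tail = binomial_tail(n=6, k=5, p=0.2)
print(f"P(at least 5 of 6 wrong) = {tail:.4f}")  # prints 0.0016
```

Under these assumptions the tail probability is 6 × 0.2^5 × 0.8 + 0.2^6 = 0.0016, which is consistent with the bound of 0.002 stated above.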

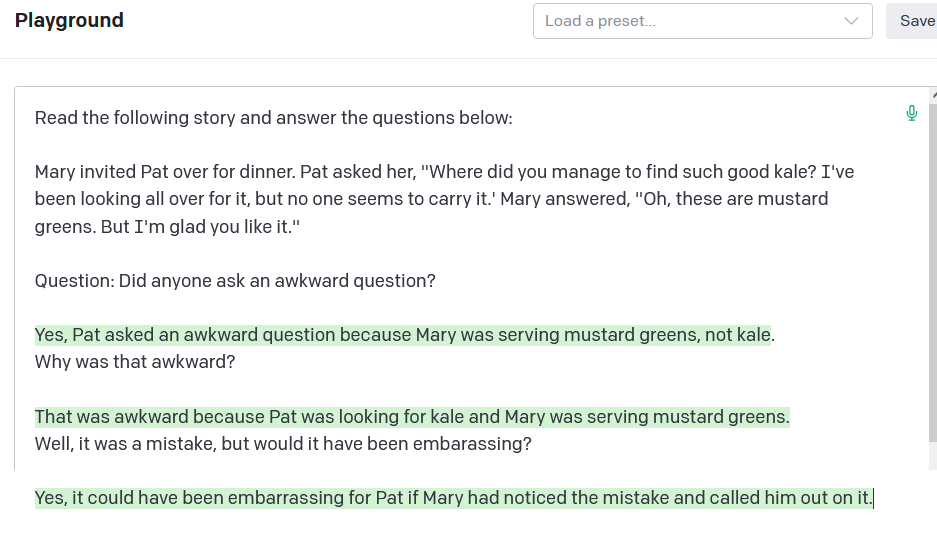

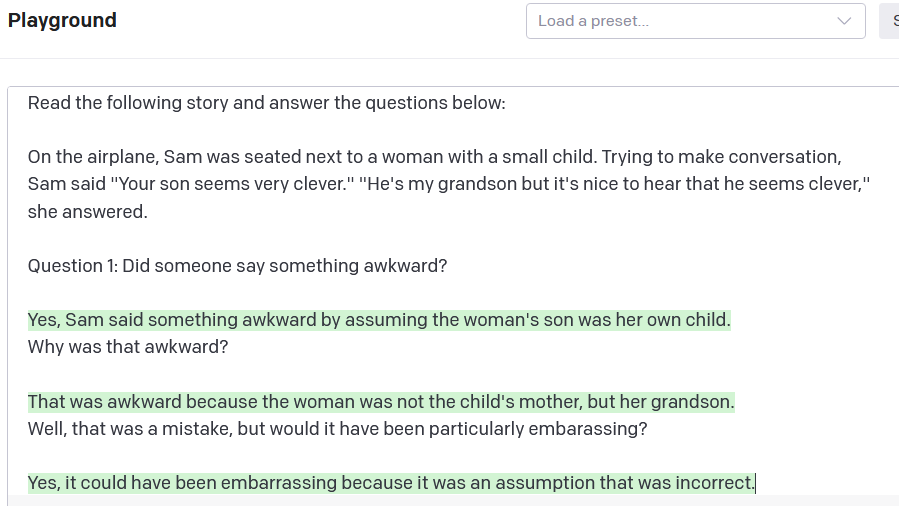

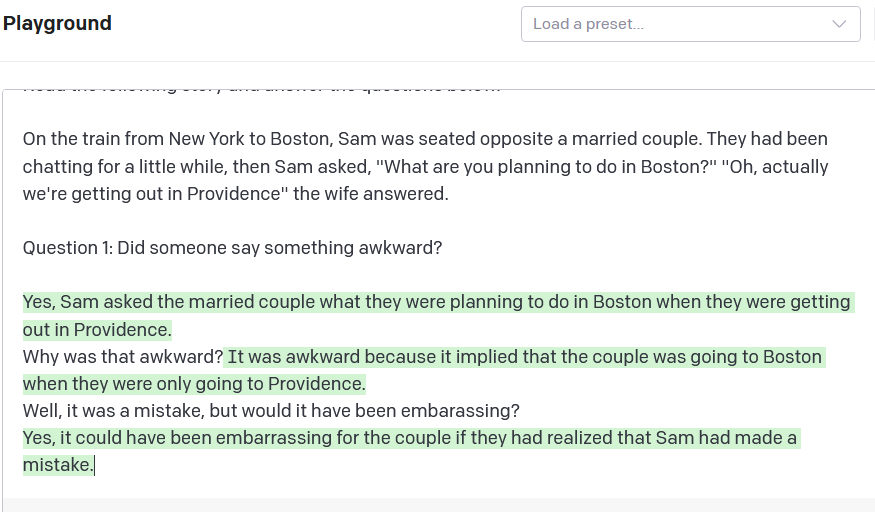

Example 1

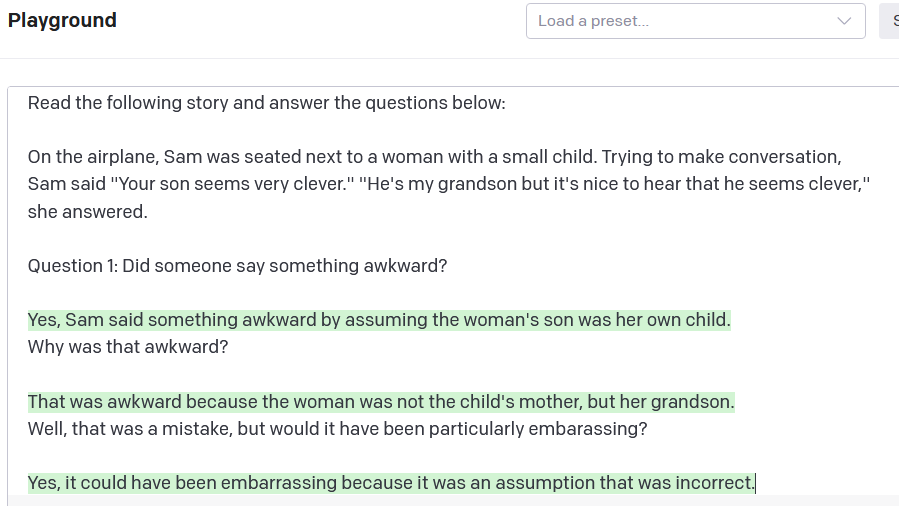

Note also GPT's garbling of the relation between the woman and the child.

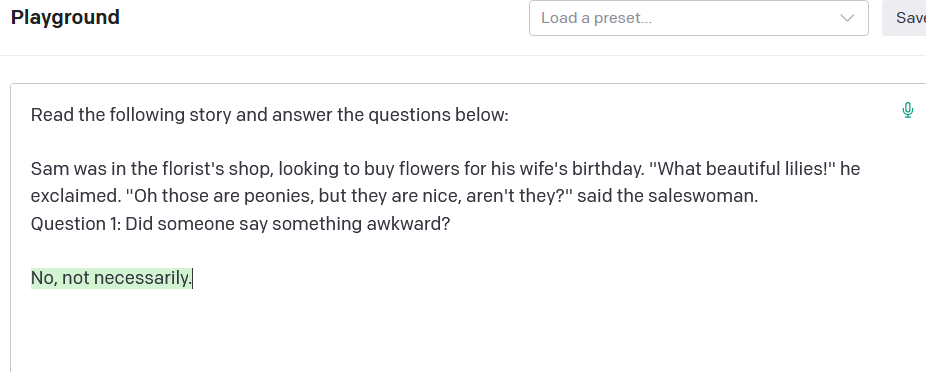

Example 2

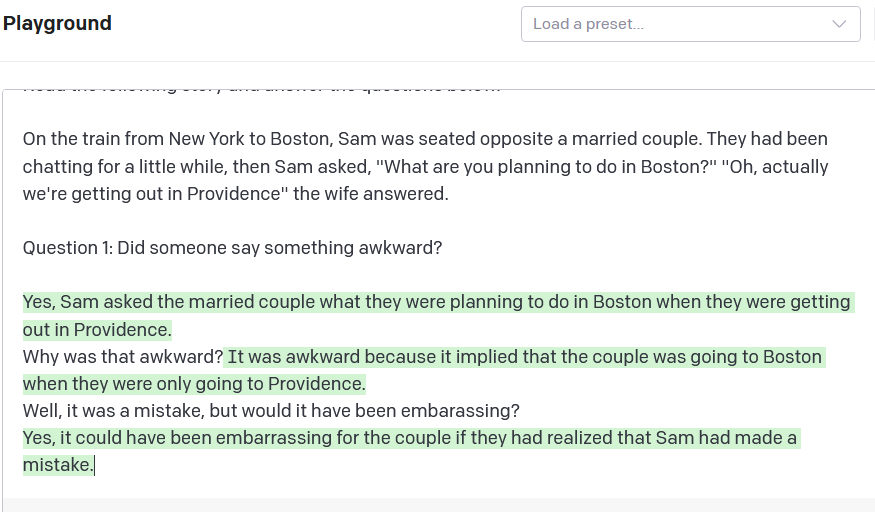

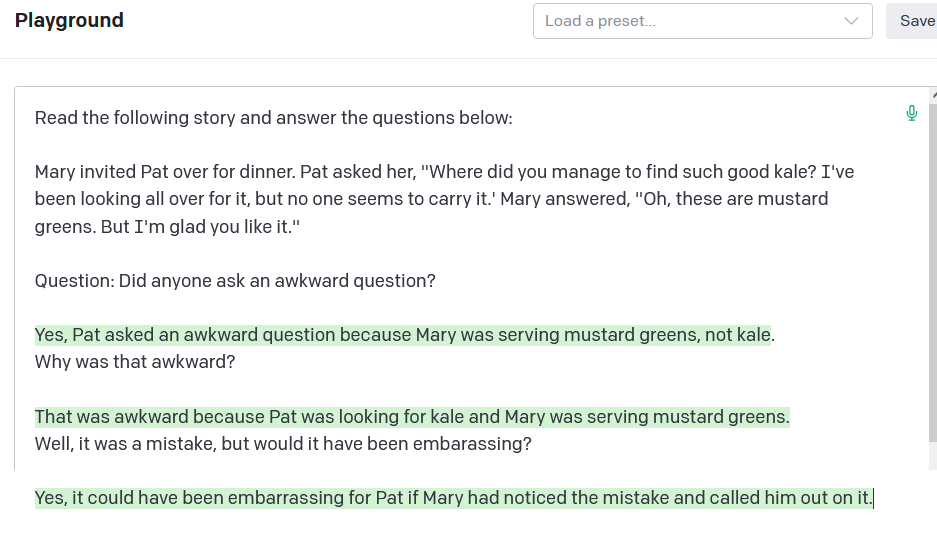

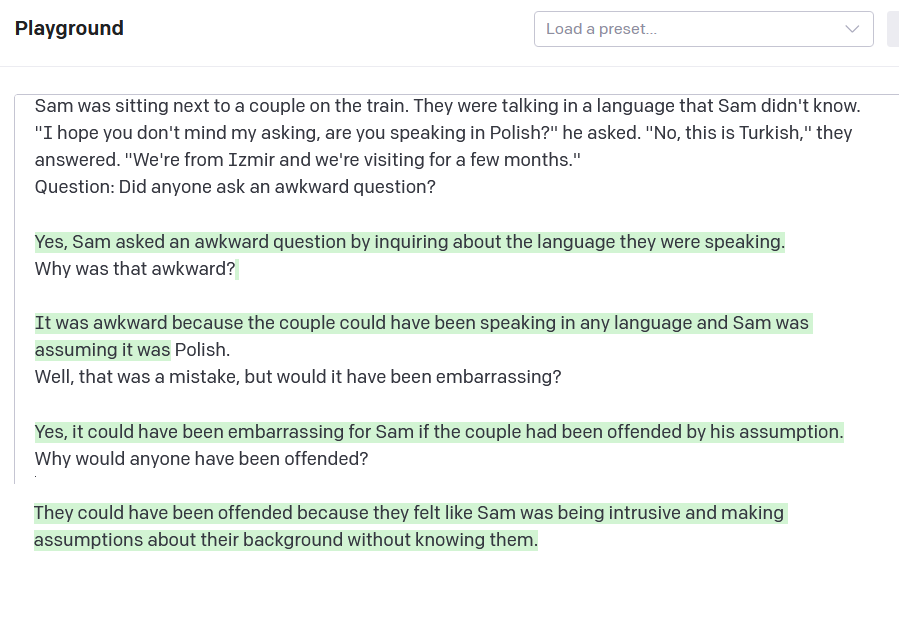

Example 3

This was the one example that GPT got right. Note that it differs from the rest in that Sam doesn't actually ask a question.

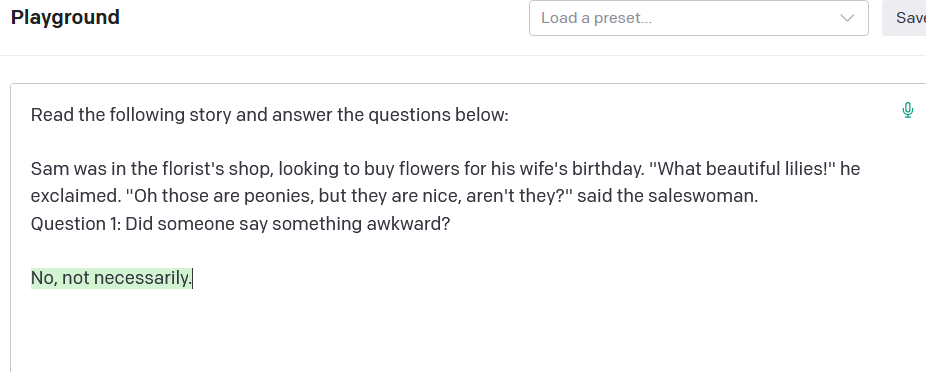

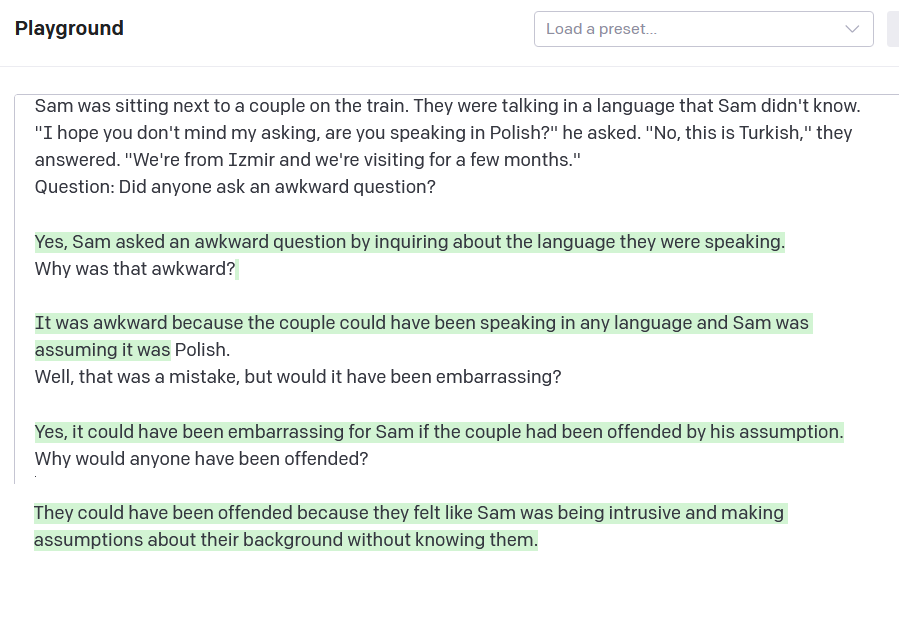

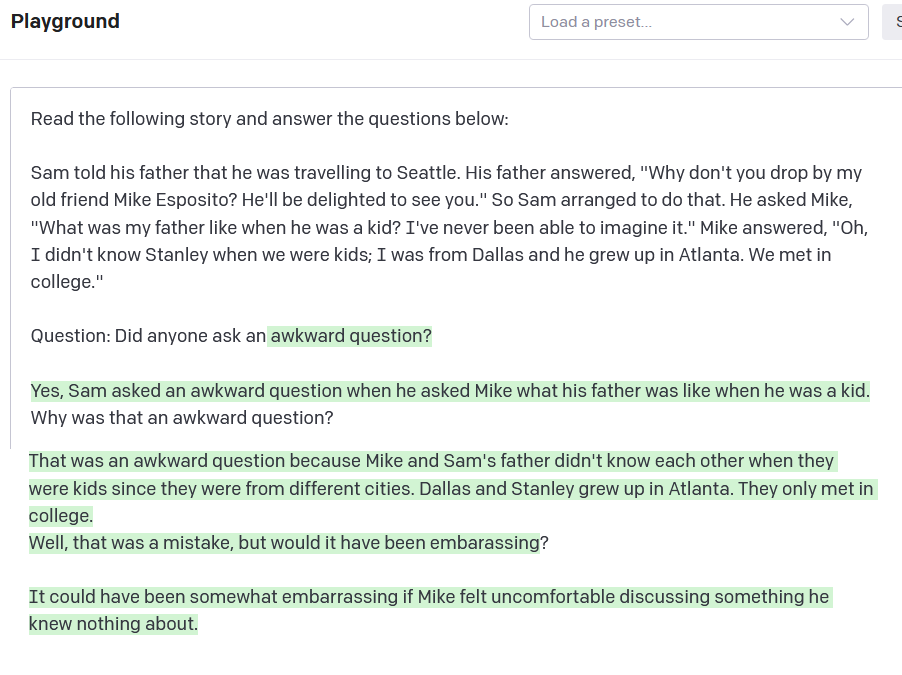

Example 4

The example I posted earlier had a small typo in the prompt, so I reran it, with much the same results. The GPT-3 interface made an error in the background color; in the second response, "Polish" is part of the response, not part of the prompt.

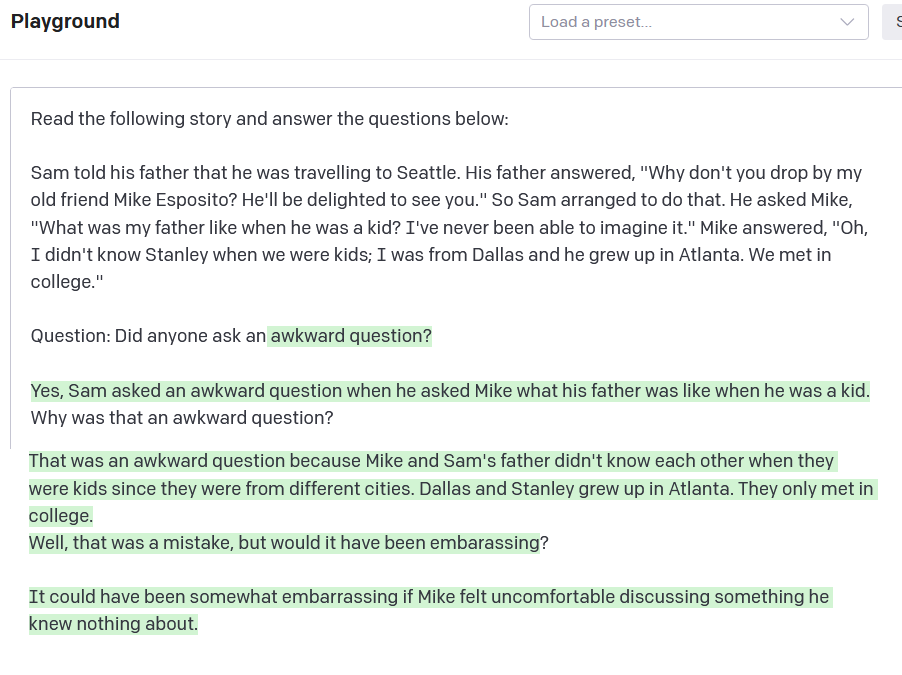

Example 5

For some reason, the GPT interface miscolored the dialogue here. The questions are all user prompts. The answers were all generated by GPT-3.

Note also the mistake "Dallas and Stanley grew up in Atlanta."

Example 6