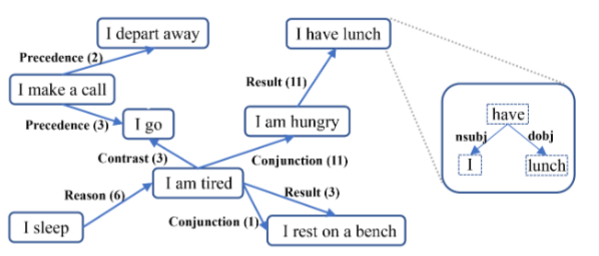

Description: A large-scale eventuality knowledge graph.

Example:

Size: 194 million "eventualities," 64 million relations, 15 types of relations.

Paper

GitHub

Created using: Text mining.

********************************************************

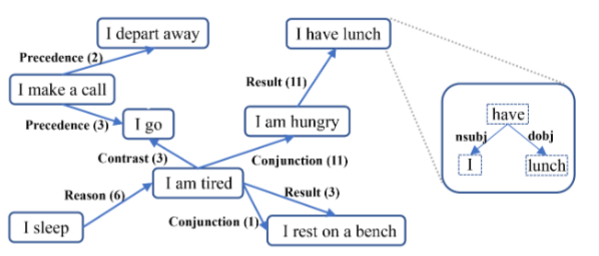

Description: ATOMIC 2020 is a commonsense knowledge graph with 1.33M everyday inferential knowledge tuples about entities and events. It is a large-scale commonsense repository of textual descriptions that encode both the social and the physical aspects of common everyday human experiences, collected with the aim of complementing the commonsense knowledge encoded in current language models. ATOMIC 2020 introduces 23 commonsense relation types, which fall into three broad categories: 9 social-interaction relations, 7 physical-entity relations, and 7 event-centered relations concerning situations surrounding a given event of interest.
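The three-way grouping of relation types can be made concrete with a small sketch. It makes three assumptions:

- The tuples are stored as tab-separated head/relation/tail lines.
- The relation names are the ATOMIC 2020 inventory as recalled from the paper.
- The sample tuples, which are invented for illustration, are representative of the format.

Both the file layout and the relation names should be checked against the released files.

```python
from collections import Counter

# Relation inventory grouped into the three categories (9 + 7 + 7 = 23).
# Names as recalled from the ATOMIC 2020 paper; verify against the release.
RELATION_CATEGORIES = {
    "social-interaction": [
        "xIntent", "xNeed", "xAttr", "xEffect", "xReact", "xWant",
        "oEffect", "oReact", "oWant",
    ],
    "physical-entity": [
        "ObjectUse", "AtLocation", "MadeUpOf", "HasProperty",
        "CapableOf", "Desires", "NotDesires",
    ],
    "event-centered": [
        "IsAfter", "HasSubEvent", "IsBefore", "HinderedBy",
        "Causes", "xReason", "isFilledBy",
    ],
}

# Reverse index: relation name -> category.
CATEGORY_OF = {rel: cat for cat, rels in RELATION_CATEGORIES.items() for rel in rels}


def parse_tuples(lines):
    """Parse tab-separated (head, relation, tail) lines, skipping blank lines.

    Assumes exactly three tab-separated fields per non-blank line.
    """
    tuples = []
    for line in lines:
        line = line.rstrip("\n")
        if not line:
            continue
        head, relation, tail = line.split("\t")
        tuples.append((head, relation, tail))
    return tuples


def count_by_category(tuples):
    """Count tuples per relation category; unknown relations go to 'other'."""
    return Counter(CATEGORY_OF.get(rel, "other") for _, rel, _ in tuples)


if __name__ == "__main__":
    # Hypothetical tuples written for illustration, not taken from the dataset.
    sample = [
        "PersonX reads PersonY's diary\txIntent\tto learn secrets",
        "PersonX reads PersonY's diary\toReact\tbetrayed",
        "diary\tAtLocation\tdesk drawer",
        "PersonX bakes bread\tHasSubEvent\tPersonX kneads dough",
    ]
    print(len(CATEGORY_OF))
    print(count_by_category(parse_tuples(sample)))
```

Mapping each relation to its category makes it easy to check, for example, how a subset of the data splits between social, physical, and event knowledge.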

Size: 1.33M knowledge tuples.

Paper

Variant: ATOMIC10x produced by knowledge distillation from GPT-3. Paper

Example:

********************************************************

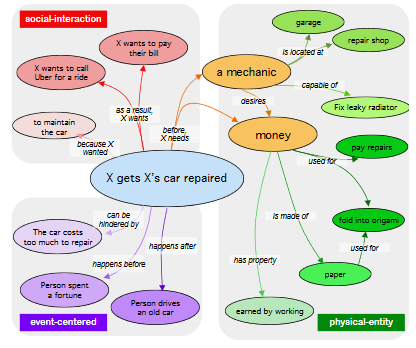

Description: A collection of triples <Subject; Predicate; Object> annotated with "Sufficiency", "Necessity", and "Salience".

Examples:

Size: 30,809 triples.

Paper

No URL is given for the dataset.

Created using: Expert annotation.

********************************************************

Description: COGBASE is a "statistics- and machine-learning-friendly, noise-resistant, nuanced knowledge core for cross-domain commonsense, lexical, affective, and social reasoning." It is "comprised of a formalism, a 10-million item, 2.7 million-concept knowledge base, and a large set of reasoning algorithms."

Example: It's not clear to me what an example in COGBASE would consist of. "COGBASE stores information at an intermediate level of abstraction (between symbols and connectionist networks). Knowledge is dynamically generated, based on the needs and restrictions of a particular context, through the combination of multiple ‘bits’ or ‘atoms’ of information. Atoms take the form of [concept, semantic primitive, concept] triples connected to one another within a graph database (presently Neo4j)."
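The quoted description of atoms suggests a simple picture. Triples are stored as edges, and the knowledge for a given context is assembled by following only the primitives that context allows.

The sketch below illustrates that idea in memory. The primitive names, the depth limit, and the `gather` function are hypothetical. They are not COGBASE's actual primitives or reasoning algorithms.

```python
from collections import defaultdict

# Each atom is a (concept, semantic_primitive, concept) triple.
# The primitive names below are hypothetical placeholders, not COGBASE's own.
ATOMS = [
    ("knife", "USED_FOR", "cutting"),
    ("knife", "FOUND_AT", "kitchen"),
    ("cutting", "CAN_CAUSE", "injury"),
    ("kitchen", "PART_OF", "house"),
]


def build_graph(atoms):
    """Index atoms by source concept (an in-memory stand-in for a graph DB)."""
    graph = defaultdict(list)
    for src, prim, dst in atoms:
        graph[src].append((prim, dst))
    return graph


def gather(graph, concept, allowed, depth=2):
    """Collect atoms reachable from `concept` within `depth` hops, following
    only primitives in `allowed` (the restrictions of the current context)."""
    found, frontier, seen = [], [concept], {concept}
    for _ in range(depth):
        next_frontier = []
        for node in frontier:
            for prim, dst in graph.get(node, []):
                if prim not in allowed:
                    continue
                found.append((node, prim, dst))
                if dst not in seen:
                    seen.add(dst)
                    next_frontier.append(dst)
        frontier = next_frontier
    return found


if __name__ == "__main__":
    g = build_graph(ATOMS)
    # A "safety" context follows usage and consequence links only.
    print(gather(g, "knife", {"USED_FOR", "CAN_CAUSE"}))
    # A "location" context follows spatial links only.
    print(gather(g, "knife", {"FOUND_AT", "PART_OF"}))
```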

********************************************************

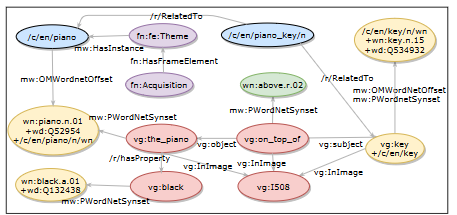

Description: A consolidated knowledge graph.

Created using: Combining seven online resources (ConceptNet, WordNet, etc.)
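At its simplest, consolidating several resources into one graph means three steps:

1. Merge their edge lists.
2. Collapse duplicate edges.
3. Keep track of which source asserted each edge.

The sketch below assumes every source has already been mapped to a shared relation vocabulary (here a hypothetical `IsA` and `CapableOf`). The lowercase/trim node normalization is a deliberate simplification. It is not CSKG's actual pipeline, which also has to align nodes across resources.

```python
from collections import defaultdict


def normalize(label):
    """Very simple node normalization: trim whitespace and lowercase."""
    return label.strip().lower()


def consolidate(sources):
    """Merge (node1, relation, node2) edges from several named sources.

    Duplicate edges collapse into one key; the value is the set of
    sources that asserted the edge (its provenance).
    """
    merged = defaultdict(set)
    for source_name, edges in sources.items():
        for node1, relation, node2 in edges:
            merged[(normalize(node1), relation, normalize(node2))].add(source_name)
    return dict(merged)


def graph_size(merged):
    """Return (number of distinct nodes, number of distinct edges)."""
    nodes = {n for n1, _, n2 in merged for n in (n1, n2)}
    return len(nodes), len(merged)


if __name__ == "__main__":
    # Hypothetical edges, already mapped to a shared relation vocabulary.
    sources = {
        "ConceptNet": [("dog", "IsA", "animal"), ("dog", "CapableOf", "bark")],
        "WordNet": [("Dog ", "IsA", "Animal")],
    }
    merged = consolidate(sources)
    print(sorted(merged[("dog", "IsA", "animal")]))  # ['ConceptNet', 'WordNet']
    print(graph_size(merged))  # (3, 2)
```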

Size: 4.7M nodes, 17.2M edges.

Example:

********************************************************

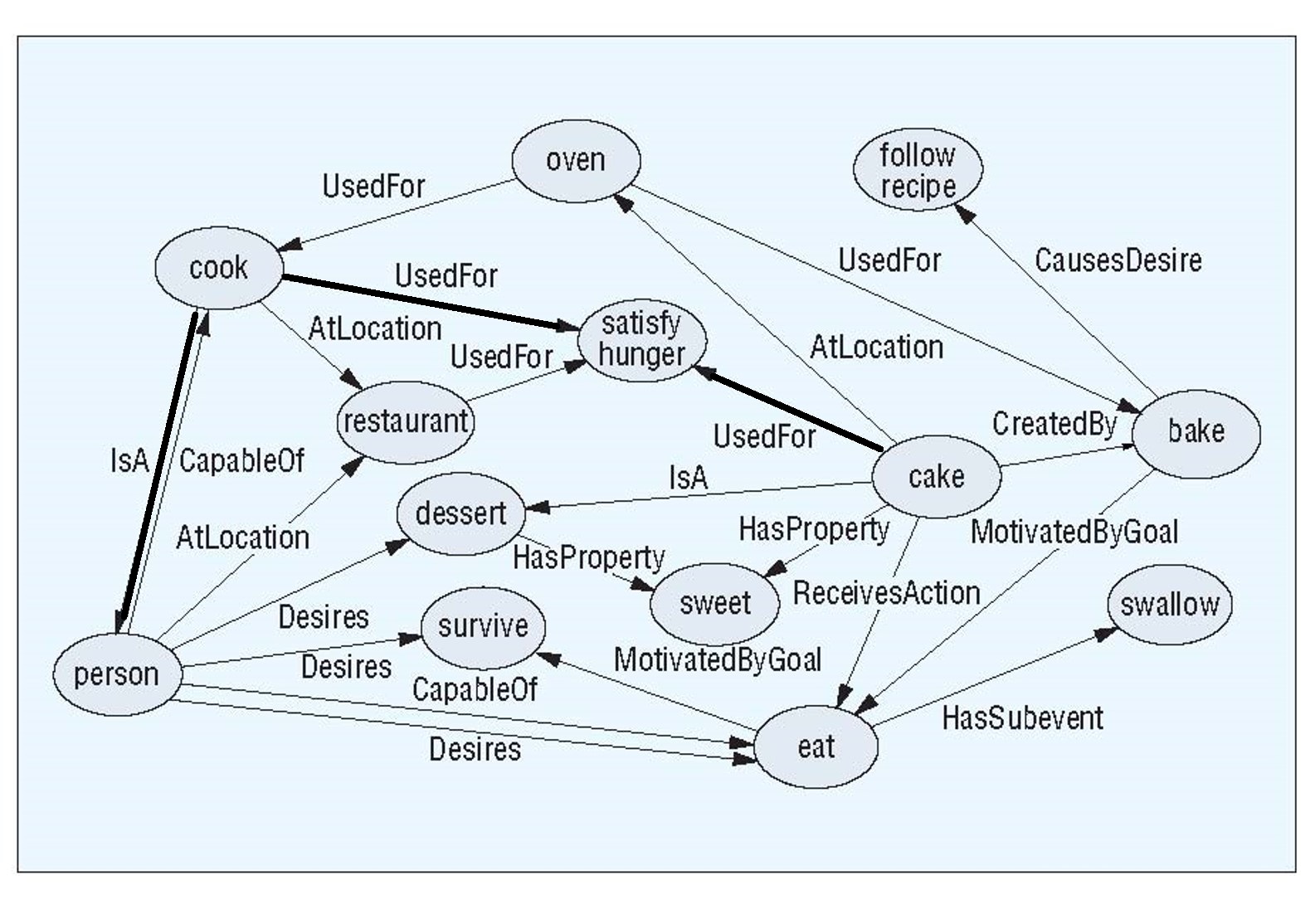

Description: Semantic network for commonsense information.

Size: 8 million nodes, 21 million edges.

Created using: Primarily crowdsourcing, though many other sources are also used.

Other languages: Versions of ConceptNet with more than 10,000 nodes exist in 83 languages.

Examples:

********************************************************

Description: CYC is a compendium of commonsense knowledge, written in a variant of higher-order logic, with over 1000 inference procedures.

Size: The ontology has a million items, not including proper nouns. There are 25 million assertions.

Example (from a representation of Romeo and Juliet):

********************************************************

Description: An example consists of an event phrase together with the actor's associated intent, the actor's reaction, and other people's reactions.

Examples:

Size: 25,000 event phrases

********************************************************

Description: Pairs of objects with human-annotated scores indicating confidence that a given pair of objects is commonly located near each other in real life.

Examples:

Size: 500 pairs of objects.

********************************************************

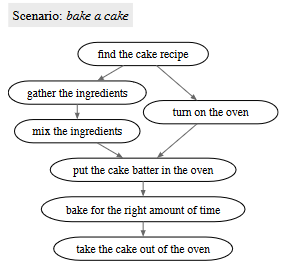

Description: A collection of partially ordered scripts.
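A partially ordered script can be held as a directed acyclic graph in which each step lists the steps that must come before it. Any topological order is then one valid way to carry out the script. Two steps with no path between them may happen in either order.

The script below is invented for illustration. The dictionary representation is an assumption, not proScript's file format. The sketch uses Python's standard `graphlib` module (Python 3.9+).

```python
from graphlib import TopologicalSorter

# Hypothetical script: each step maps to the set of steps that must precede it.
SCRIPT = {
    "find a recipe": set(),
    "buy ingredients": {"find a recipe"},
    "preheat the oven": {"find a recipe"},
    "mix the batter": {"buy ingredients"},
    "bake the cake": {"mix the batter", "preheat the oven"},
}


def one_linear_order(script):
    """Return one total order consistent with the partial order."""
    return list(TopologicalSorter(script).static_order())


def is_valid_order(script, order):
    """Check that `order` has every step exactly once and respects all constraints."""
    if sorted(order) != sorted(script):
        return False
    position = {step: i for i, step in enumerate(order)}
    return all(position[p] < position[s] for s, preds in script.items() for p in preds)


def ancestors(script, step):
    """All steps that must (transitively) happen before `step`."""
    seen, stack = set(), list(script.get(step, ()))
    while stack:
        prev = stack.pop()
        if prev not in seen:
            seen.add(prev)
            stack.extend(script.get(prev, ()))
    return seen


def unordered(script, a, b):
    """True if the script leaves the relative order of `a` and `b` open."""
    return a not in ancestors(script, b) and b not in ancestors(script, a)


if __name__ == "__main__":
    order = one_linear_order(SCRIPT)
    print(order)
    print(is_valid_order(SCRIPT, order))  # True
    print(unordered(SCRIPT, "buy ingredients", "preheat the oven"))  # True
```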

Size: 6414 partially ordered scripts.

Created using: Crowdsourcing.

Example:

********************************************************

Example:

********************************************************

Description: Visual Comet is a large-scale repository of Visual Commonsense Graphs consisting of over 1.4 million textual descriptions of visual commonsense inferences, carefully annotated over a diverse set of 60,000 images, each paired with short video summaries of what happens before and after. In addition, we provide person grounding (i.e., co-reference links) between people appearing in the image and people mentioned in the textual commonsense descriptions.

Size: 60K images, 1.4M textual descriptions.

Created using: Crowdsourcing with extensive automated postprocessing.

Example:

********************************************************

Description: A large commonsense knowledge base, including object properties, comparative knowledge, part-whole, and activity frames.

Examples:

mountain: a land mass that projects well above its surroundings; higher than a hill.

Size: 2 million concepts, 18 million assertions.

Paper

Web site

Created using: "Generated automatically from input sources."

Commonsense Knowledge Graph (CSKG)

Paper

Github

ConceptNet

Paper

Web site

CYC

Papers:

Web site

Note: CYC is not publicly available.

```
(ist
(MTDimFn
(TemporalExtenFn RomeoBuysPosionFromTheApothecary
(desires ApothecaryInRomeoAndJuliet
(relation NotExistsInstance agentPunished Execution-Judicial
ApothecaryInRomeoAndJuliet))))
```

Event2Mind

| Event | X's intent | X's reaction | Y's reaction |
|---|---|---|---|
| X cooks Thanksgiving dinner | to impress their family | tired, a sense of belonging | impressed |
| X drags X's feet | to avoid doing things | lazy, bored | frustrated, impatient |
| X reads Y's diary | to be nosey, know secrets | guilty, curious | angry, violated, betrayed |
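Each example above pairs one event phrase with free-text answers along three dimensions. A minimal sketch of that record could flatten it into (event, dimension, text) triples, the kind a generation model might be trained on. The class and field names are hypothetical and not Event2Mind's released format. The dimension labels simply reuse the table headers.

```python
from dataclasses import dataclass, field


@dataclass
class EventAnnotation:
    """One annotated event phrase (hypothetical field names)."""
    event: str
    x_intent: list = field(default_factory=list)
    x_reaction: list = field(default_factory=list)
    y_reaction: list = field(default_factory=list)

    def to_triples(self):
        """Flatten into (event, dimension, text) triples, one per answer."""
        dims = (
            ("X's intent", self.x_intent),
            ("X's reaction", self.x_reaction),
            ("Y's reaction", self.y_reaction),
        )
        return [(self.event, dim, text) for dim, texts in dims for text in texts]


if __name__ == "__main__":
    # Values copied from the example table above.
    diary = EventAnnotation(
        event="X reads Y's diary",
        x_intent=["to be nosey", "know secrets"],
        x_reaction=["guilty", "curious"],
        y_reaction=["angry", "violated", "betrayed"],
    )
    for triple in diary.to_triples():
        print(triple)
```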

Paper

Github

Created using: Crowdsourcing.

LocatedNear: Symbolic benchmark

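| Object 1 | Object 2 | Score |
|---|---|---|
| horse | wagon | 1 |
| stone | road | 1 |
| room | wall | 1 |
| bed | dish | 0 |
| horse | country | 0 |
| son | queen | 0 |
| pie | mud | 0 |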
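A benchmark of labeled pairs like the one above is typically used to score a predictor. The sketch below parses rows in the whitespace-separated `object object label` layout shown and measures accuracy. That layout is an assumption about the file format, and multi-word object names would need a different delimiter. The `KNOWN_NEAR` lookup is a hypothetical stand-in for a real model.

```python
# The example rows above, as whitespace-separated "object object label" lines.
EXAMPLE = """\
horse wagon 1
stone road 1
room wall 1
bed dish 0
horse country 0
son queen 0
pie mud 0
"""


def parse_pairs(text):
    """Parse lines into (object1, object2, label) with an integer label."""
    pairs = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) != 3:
            continue  # skip blank or malformed lines
        a, b, label = parts
        pairs.append((a, b, int(label)))
    return pairs


def accuracy(pairs, predict):
    """Fraction of pairs where predict(a, b) equals the gold label."""
    correct = sum(1 for a, b, gold in pairs if predict(a, b) == gold)
    return correct / len(pairs)


# Hypothetical predictor: a tiny hand-written set of "near" pairs.
KNOWN_NEAR = {("horse", "wagon"), ("room", "wall")}


def lookup_predict(a, b):
    """Predict 1 if the pair (in either order) is in KNOWN_NEAR, else 0."""
    return int((a, b) in KNOWN_NEAR or (b, a) in KNOWN_NEAR)


if __name__ == "__main__":
    pairs = parse_pairs(EXAMPLE)
    # Always predicting "near" gets the 3 positive rows right out of 7.
    print(accuracy(pairs, lambda a, b: 1))
    # The lookup misses only "stone road", so 6 of 7 are right.
    print(accuracy(pairs, lookup_predict))
```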

Paper:

Github

Created using: The word pairs were obtained by text mining; the annotations were created by crowd workers.

proScript: Partially Ordered Scripts Generation via Pre-trained Language Models

Paper

Dataset is not yet publicly available.

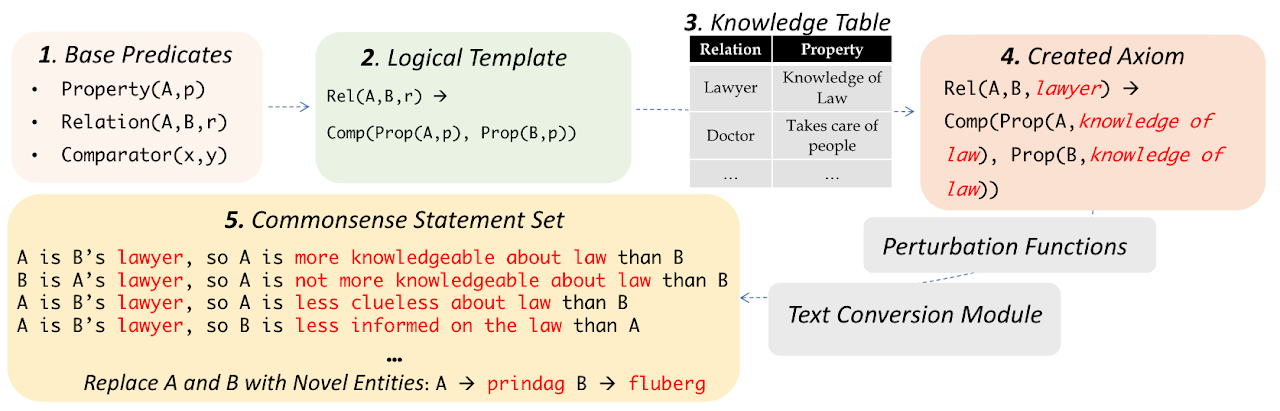

RICA: Evaluating Robust Inference Capabilities Based on Commonsense Axioms

RICA is a logically-grounded inference challenge with a focus on the ability to make robust commonsense inferences despite textual perturbations. RICA consists of a set of natural language statements in the "premise-conclusion" format that require reasoning using latent (implicit) commonsense relationships. We formulate these abstract commonsense relations between entities in first-order logic and refer to them as commonsense axioms.
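To make the idea of an axiom concrete, the sketch below takes one hypothetical comparative axiom, roughly "for all a, b: heavier(a, b) implies harderToLift(a, b)". It does two things:

- Instantiates the axiom as a premise-conclusion pair.
- Adds a perturbed variant in which the premise uses the antonym and the conclusion must flip with it.

The axiom, entity names, and perturbation are illustrative assumptions, not items from RICA.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ComparativeAxiom:
    """Hypothetical axiom: if a is <prop> than b, then a is <consequence> than b."""
    prop: str
    prop_antonym: str
    consequence: str
    consequence_antonym: str


def probes(axiom, a, b):
    """Return (premise, conclusion) pairs: the plain instance and an
    antonym-perturbed instance whose conclusion flips accordingly."""
    plain = (
        f"{a} is {axiom.prop} than {b}.",
        f"{a} is {axiom.consequence} than {b}.",
    )
    perturbed = (
        f"{a} is {axiom.prop_antonym} than {b}.",
        f"{a} is {axiom.consequence_antonym} than {b}.",
    )
    return [plain, perturbed]


if __name__ == "__main__":
    lift = ComparativeAxiom("heavier", "lighter", "harder to lift", "easier to lift")
    for premise, conclusion in probes(lift, "A", "B"):
        print(premise, "=>", conclusion)
```

A model that has really captured the axiom should accept both pairs. Accepting the plain pair while also accepting "A is lighter than B. => A is harder to lift than B." would indicate sensitivity to surface wording rather than the underlying relation.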

Size: 257K commonsense statements, 43K axioms, 1.6K probes, 10K generated sentences.

Website

(Link is now dead.)

Paper

Visual Comet

Paper

Web site

WebChild 2.0

| Relation | Examples |
|---|---|
| Physical properties | large, high, heavy, cold, hard ... |
| Abstract properties | elegant, old, safe, holy, risky ... |
| Comparables | mountain, hill; mountain, mound; mountain, high hill; valley, mountain ... |
| Has physical parts | mountain peak, mountainside, slope, tableland, hill ... |
| Has substance | mixture, metallic element, material, page, wood ... |
| In spatial proximity | coast, tunnel, lake, sea, river ... |
| Activities | climb mountain; cross mountain; move mountain; see mountain; ascend mountain |