Image Retrieval with Multiple Regions-of-Interest

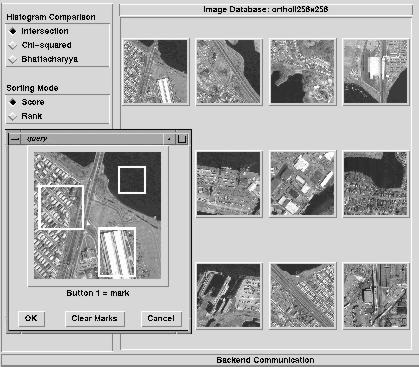

The goal of this project has been to develop a new image retrieval system based on the principle that the user, not the computer, is best qualified to specify the "content" of an image. The user is therefore asked to mark salient regions-of-interest (ROIs) in the query image and, optionally, their spatial arrangement. Because the search engine then matches what the user actually cares about, it returns matches that users find more acceptable, which makes it a more powerful image retrieval tool.

Abbreviations: QBIC: Query-by-Image-Content, ROI: Region-of-Interest

Background and objectives: Most current "query-by-image-content" database indexing and retrieval systems rely on global image characteristics such as color and texture histograms. While these simple descriptors are fast and often succeed in capturing a vague essence of the user's query, they more often fail because they lack higher-level knowledge of what exactly interested the user in the query image, i.e., the user-defined content. The goal of this project was to develop and test a new image retrieval technique that uses local image representations, grouping them into multiple user-specified "regions-of-interest" while preserving their relative spatial relationships, in order to build a more powerful search engine for a variety of image database retrieval applications.

Technical discussion:

Image retrieval in general is based on two key components: a set of image features (such as color or texture attributes) and a similarity metric (used to compare images). To date, most systems use global color histograms to represent the color composition of an image, thus ignoring the spatial layout of color in the query image. Likewise, a single global vector (or histogram) of texture measures (usually computed as the output of a set of linear filters at multiple scales) is used to represent non-color image attributes (such as coarseness, etc.). The similarity metric used to compute the degree-of-match between two images is typically a Euclidean norm on the difference between two such global color/texture representations.
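To make the contrast concrete, here is a minimal Python/NumPy sketch of the conventional global approach described above: a joint RGB color histogram over the whole image, compared with a Euclidean norm. The bin count and the function names are illustrative assumptions, not taken from any particular system. The example reproduces the limitation noted in the text: two images with the same colors in mirrored positions cannot be told apart.

```python
import numpy as np

def global_color_histogram(image, bins=8):
    """Joint color histogram of an H x W x 3 uint8 image, normalized to sum to 1.

    Every pixel falls into one of bins**3 cells wherever it is located, so the
    spatial layout of color is discarded.
    """
    q = image.astype(np.int64) * bins // 256          # per-channel bin, 0..bins-1
    idx = (q[..., 0] * bins + q[..., 1]) * bins + q[..., 2]
    hist = np.bincount(idx.ravel(), minlength=bins ** 3).astype(np.float64)
    return hist / hist.sum()

def euclidean_distance(h1, h2):
    """Euclidean (L2) norm of the difference between two histograms."""
    return float(np.linalg.norm(h1 - h2))

left_red = np.zeros((32, 32, 3), dtype=np.uint8)
left_red[:, :16, 0] = 255           # red on the left half, black on the right
right_red = left_red[:, ::-1]       # mirrored: red on the right half
d = euclidean_distance(global_color_histogram(left_red),
                       global_color_histogram(right_red))
print(d)  # 0.0
```

A distance of zero for two visibly different layouts is exactly the failure mode that motivates a local, region-based representation.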

In contrast, our system divides the image into an array of 16-by-16 pixel blocks, each of which carries two feature representations: a joint color histogram in LUV color space and a joint 3D histogram of edge magnitude, Laplacian and dominant edge orientation, computed at two octave scales. These non-parametric densities represent local color and texture, and, owing to the additive property of histograms, they can easily be combined to form bigger image blocks, all the way up to the entire image, at which point they become complete global representations. When the user specifies a region of interest, its underlying blocks are "pooled" into a "meta-block" that is then searched for in the database. Multiple regions are searched likewise, and the intersection of the best matches determines the final similarity ranking of images in the database. In addition, the user has the option of specifying whether multiple selected regions should maintain their respective spatial arrangement.
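The block decomposition and additive pooling can be sketched in Python with NumPy. This is a minimal illustration under assumptions that the original does not state: the image is already converted to LUV (for example with a library routine such as `skimage.color.rgb2luv`); each LUV channel is quantized into 4 bins over assumed ranges; the texture histogram uses per-pixel gradient magnitude, a 5-point Laplacian, and gradient orientation folded into [0, π) as a simple stand-in for "dominant edge orientation"; and the second octave is obtained by 2x2 averaging. The helper names (`block_histograms`, `color_index_map`, `texture_index_map`, `features`, `pool`) and all bin counts and ranges are hypothetical.

```python
import numpy as np

BLOCK = 16  # block size in pixels, as in the text

def quantize(values, lo, hi, bins):
    """Map values in [lo, hi] to bin indices 0..bins-1, clipping out-of-range values."""
    q = np.floor((values - lo) / (hi - lo) * bins).astype(np.int64)
    return np.clip(q, 0, bins - 1)

def block_histograms(index_map, nbins, block=BLOCK):
    """Count bin indices inside each block x block tile of an H x W index map.

    Returns shape (H // block, W // block, nbins); partial border tiles are dropped.
    """
    rows, cols = index_map.shape[0] // block, index_map.shape[1] // block
    tiles = index_map[:rows * block, :cols * block]
    tiles = tiles.reshape(rows, block, cols, block).transpose(0, 2, 1, 3)
    tiles = tiles.reshape(rows, cols, block * block)
    # Shift each tile's indices into its own range so one bincount covers all tiles.
    offsets = np.arange(rows * cols).reshape(rows, cols, 1) * nbins
    counts = np.bincount((tiles + offsets).ravel(), minlength=rows * cols * nbins)
    return counts.reshape(rows, cols, nbins)

def color_index_map(luv, bins=4, ranges=((0, 100), (-100, 180), (-140, 110))):
    """Joint LUV bin index per pixel (channel ranges are assumptions)."""
    idx = np.zeros(luv.shape[:2], dtype=np.int64)
    for ch, (lo, hi) in enumerate(ranges):
        idx = idx * bins + quantize(luv[..., ch], lo, hi, bins)
    return idx

def texture_index_map(gray, bins=4, mag_max=50.0, lap_max=50.0):
    """Joint (edge magnitude, Laplacian, orientation) bin index per pixel."""
    g = gray.astype(np.float64)
    gy, gx = np.gradient(g)
    mag = np.hypot(gx, gy)
    p = np.pad(g, 1, mode="edge")  # 5-point Laplacian with replicated borders
    lap = p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:] - 4 * p[1:-1, 1:-1]
    theta = np.mod(np.arctan2(gy, gx), np.pi)  # orientation in [0, pi), sign ignored
    idx = quantize(mag, 0, mag_max, bins)
    idx = idx * bins + quantize(lap, -lap_max, lap_max, bins)
    return idx * bins + quantize(theta, 0, np.pi, bins)

def features(luv):
    """Per-block [color | fine texture | coarse texture] histograms."""
    color = block_histograms(color_index_map(luv), 4 ** 3)
    gray = luv[..., 0]
    fine = block_histograms(texture_index_map(gray), 4 ** 3)
    # Second octave: 2x2 average pooling, so each 16x16 block maps to an 8x8 block.
    h, w = gray.shape[0] // 2 * 2, gray.shape[1] // 2 * 2
    half = gray[:h, :w].reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))
    coarse = block_histograms(texture_index_map(half), 4 ** 3, block=BLOCK // 2)
    return np.concatenate([color, fine, coarse], axis=2)

def pool(block_hists, top, left, height, width):
    """Histogram of a rectangle of blocks: by additivity, just the sum of its blocks."""
    return block_hists[top:top + height, left:left + width].sum(axis=(0, 1))

rng = np.random.default_rng(0)
luv = np.stack([rng.uniform(0, 100, (64, 96)),
                rng.uniform(-100, 180, (64, 96)),
                rng.uniform(-140, 110, (64, 96))], axis=2)
feats = features(luv)                        # 4 x 6 blocks, 192 bins each
whole = pool(feats, 0, 0, *feats.shape[:2])  # pool every block
glob = np.bincount(color_index_map(luv).ravel(), minlength=4 ** 3)
print(feats.shape, np.array_equal(whole[:64], glob))  # (4, 6, 192) True
```

Because every block histogram holds raw counts, pooling any rectangle of blocks is a plain sum, and pooling all blocks reproduces the global color histogram of the block-aligned image, which is what the final check confirms.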

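The meta-block search and multi-region ranking can be sketched as follows. This is a hypothetical illustration, not the project's code. The original does not name its similarity measure, so normalized histogram intersection is assumed; each ROI is matched independently by exhaustive sliding-window search over a database image's grid of block histograms; "intersection of the best matches" is read as keeping only images that appear in every ROI's top-k list, ordered by summed score; and the optional spatial constraint is checked afterwards by comparing relative block offsets within a tolerance `tol`. All names and parameters (`intersection`, `best_window`, `layout_ok`, `retrieve`, `k`, `tol`) are assumptions, and the database is synthetic.

```python
import numpy as np

def intersection(query, region):
    """Normalized histogram intersection; 1.0 when `region` covers every bin of `query`."""
    return float(np.minimum(query, region).sum() / query.sum())

def best_window(query, grid, h, w):
    """Slide an h x w window of blocks over `grid` (rows x cols x bins), pooling
    each window by summation; return (score, row, col) of the best match."""
    best = (-1.0, 0, 0)
    for r in range(grid.shape[0] - h + 1):
        for c in range(grid.shape[1] - w + 1):
            s = intersection(query, grid[r:r + h, c:c + w].sum(axis=(0, 1)))
            if s > best[0]:
                best = (s, r, c)
    return best

def layout_ok(name, rois, per_roi, tol):
    """True if the matched windows keep the query ROIs' block offsets relative
    to the first ROI, to within `tol` blocks."""
    r0, c0 = rois[0][3], rois[0][4]
    _, m0r, m0c = per_roi[0][name]
    for roi, matches in zip(rois[1:], per_roi[1:]):
        _, mr, mc = matches[name]
        if (abs((mr - m0r) - (roi[3] - r0)) > tol
                or abs((mc - m0c) - (roi[4] - c0)) > tol):
            return False
    return True

def retrieve(rois, database, k=3, keep_layout=False, tol=1):
    """rois: list of (pooled_hist, h, w, row, col), with (row, col) the ROI's
    top-left block in the query image. database: name -> block-histogram grid."""
    per_roi = []
    for query, h, w, _, _ in rois:
        scores = {n: best_window(query, g, h, w) for n, g in database.items()}
        top = sorted(scores, key=lambda n: scores[n][0], reverse=True)[:k]
        per_roi.append({n: scores[n] for n in top})
    # Keep only images that are among the k best matches for every ROI.
    survivors = set.intersection(*(set(m) for m in per_roi))
    if keep_layout:
        survivors = {n for n in survivors if layout_ok(n, rois, per_roi, tol)}
    # Order the survivors by their summed per-ROI scores.
    return sorted(survivors, key=lambda n: sum(m[n][0] for m in per_roi), reverse=True)

# Synthetic demo: plant two distinctive regions in "img3" and query with them.
rng = np.random.default_rng(0)
database = {f"img{i}": rng.integers(0, 10, size=(6, 8, 16)) for i in range(5)}
target = database["img3"]
target[1:3, 1:3, :8] = 100   # ROI 1: 2x2 blocks, strong in bins 0-7
target[3:5, 4:7, 8:] = 100   # ROI 2: 2x3 blocks, strong in bins 8-15
rois = [(target[1:3, 1:3].sum(axis=(0, 1)), 2, 2, 1, 1),
        (target[3:5, 4:7].sum(axis=(0, 1)), 2, 3, 3, 4)]
ranking = retrieve(rois, database, k=2, keep_layout=True)
print(ranking[0])  # img3
```

Matching each ROI independently and checking the layout afterwards keeps the sketch short; a joint search over ROI placements would enforce the spatial arrangement more strictly, at a higher computational cost.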
Collaboration: This project was aided by interns from New York University and Carnegie Mellon University.

Future Directions: The search for ROIs is currently computationally intensive, and pruning strategies should be implemented to avoid exhaustively searching the entire database for each "meta-block" query. Nevertheless, this region-based image retrieval approach should prove useful not only for general image databases but also, in particular, for medical applications, where both appearance and spatial factors play a significant diagnostic role.
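One possible pruning strategy, offered only as an illustration and not as something this project implemented, follows from the additive property of histograms: a pooled region's histogram never exceeds the whole-image histogram in any bin, so the normalized histogram intersection of a meta-block query with the whole image is an upper bound on its score for any window in that image. Images can be visited in order of decreasing bound, and the exhaustive window search stops once no remaining bound can beat the current k-th best score. The similarity measure (histogram intersection) and all names (`search_with_pruning`, `best_window`, `k`) are assumptions, and the data is synthetic.

```python
import numpy as np

def intersection(query, region):
    """Normalized histogram intersection, assumed here as the meta-block score."""
    return float(np.minimum(query, region).sum() / query.sum())

def best_window(query, grid, h, w):
    """Exhaustive search: best score of any h x w window of blocks in `grid`."""
    return max(intersection(query, grid[r:r + h, c:c + w].sum(axis=(0, 1)))
               for r in range(grid.shape[0] - h + 1)
               for c in range(grid.shape[1] - w + 1))

def search_with_pruning(query, h, w, database, k=1):
    """Top-k (score, name) pairs for one meta-block query, plus how many images
    needed the exhaustive window search."""
    # Upper bound per image: any window's histogram is <= the whole-image histogram.
    bounds = {n: intersection(query, g.sum(axis=(0, 1))) for n, g in database.items()}
    results, searched = [], 0
    for name in sorted(bounds, key=bounds.get, reverse=True):
        if len(results) == k and bounds[name] <= results[-1][0]:
            break  # every remaining image has an even lower bound
        score = best_window(query, database[name], h, w)
        searched += 1
        results = sorted(results + [(score, name)], reverse=True)[:k]
    return results, searched

rng = np.random.default_rng(0)
database = {f"img{i}": rng.integers(0, 5, size=(6, 8, 16)) for i in range(6)}
database["img3"][1:3, 1:3, :8] = 100           # a distinctive 2x2-block region
query = database["img3"][1:3, 1:3].sum(axis=(0, 1))
results, searched = search_with_pruning(query, 2, 2, database, k=1)
print(results, searched)  # [(1.0, 'img3')] 1
```

The whole-image histograms can be precomputed once, so each bound costs a single histogram comparison instead of a full sliding-window search. Because the test uses `<=`, images that could at best only tie the current k-th score are also skipped.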

Authors:

Baback Moghaddam

Information Technology Center America

Electric Research Laboratory