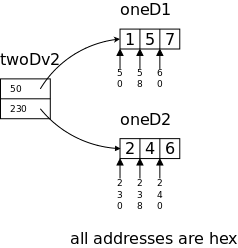

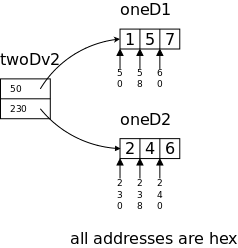

long oneD1[3] = {1, 5, 7},

oneD2[3] = {2, 4, 6};

*twoDv2[2] = {oneD1, oneD2};

// = {&oneD1[0], oneD2[0]

Start Lecture #01

I start at 0 so that when we get to chapter 1, the numbering will agree with the text.

There is a web site for the course. You can find it from my home page, which is listed above, or from the department's home page.

Start Lecture #01marker above can be thought of as

End Lecture #00.

The course has several texts.

https://diveintosystems.cs.swarthmore.edu/x86_64/antora/diveintosystems/betaThe material on C is standard, but the order of presentation is not. A difference between this book and K&R is that the latter starts with low-level I/O (read and print one character) whereas this book starts with a higher level approach.

Computer Organizationportion of the course. It is required.

Grades are based on the labs and exams; the weighting will be

approximately

20%*LabAverage + 35%*MidtermExam + 45%*FinalExam (but see homeworks

below).

I make a distinction between homeworks and labs.

Labs are

Homeworks are

Homeworks are numbered by the class in which they are assigned. So any homework given today becomes part of homework #1. Even if I do not give homework today, any homework assigned next class would become homework #2. In general the homework present in the notes for lecture #n is homework #n.

You may develop (i.e., write and test) lab assignments on any system you wish, e.g., your laptop. However, ...

NYU Brightspace.

This will be covered in the recitations.

I feel it is important for CS students to be familiar with basic

client-server computing (related to cloud computing

) in which

one develops software on a client machine (for us, most likely one's

personal laptop), but runs it on a remote server (for us,

linserv1.cims.nyu.edu).

This requires three steps.

I have supposedly given you each an account on linserv1 (and access), which takes care of step 1. Accessing linserv1 and access is different for different client (laptop) operating systems.

seelinserv1, but can

seeaccess. So from outside (you play the Duo/MFA game and) log into access; then you are inside.

If you receive a message from linserv1 about an authentication failure, please follow the advice below from the systems group.

The first line of defense in all cases of authentication failure is to attempt a password reset. Please visit https://cims.nyu.edu/webapps/password/reset to do so. Within 15 minutes of a password reset submission, instructions to retrieve the new password will be sent to xyz123@nyu.edu. Please e-mail helpdesk@cims.nyu.edu in the event that the password reset either fails, or that the new password does not work (be sure to preface your ssh command with your username, e.g. ssh xyz123@access.cims.nyu.edu).

Good methods for obtaining help include

This course uses (and teaches) the C programming language. You must write your labs in C (or C++, but we will not teach the latter). Moreover C, but not C++, will appear on exams.

The rules for incompletes and grade changes are set by the school and not the department or individual faculty member.

The rules set by CAS can be found in <http://cas.nyu.edu/object/bulletin0608.ug.academicpolicies.html>, which states:

The grade of I (Incomplete) is a temporary grade that indicates that the student has, for good reason, not completed all of the course work but that there is the possibility that the student will eventually pass the course when all of the requirements have been completed. A student must ask the instructor for a grade of I, present documented evidence of illness or the equivalent, and clarify the remaining course requirements with the instructor.

The incomplete grade is not awarded automatically. It is not used when there is no possibility that the student will eventually pass the course. If the course work is not completed after the statutory time for making up incompletes has elapsed, the temporary grade of I shall become an F and will be computed in the student's grade point average.

All work missed in the fall term must be made up by the end of the following spring term. All work missed in the spring term or in a summer session must be made up by the end of the following fall term. Students who are out of attendance in the semester following the one in which the course was taken have one year to complete the work. Students should contact the College Advising Center for an Extension of Incomplete Form, which must be approved by the instructor. Extensions of these time limits are rarely granted.

Once a final (i.e., non-incomplete) grade has been submitted by the instructor and recorded on the transcript, the final grade cannot be changed by turning in additional course work.

This email from the assistant director, describes the policy.

Dear faculty, The vast majority of our students comply with the department's academic integrity policies; see www.cs.nyu.edu/web/Academic/Undergrad/academic_integrity.html www.cs.nyu.edu/web/Academic/Graduate/academic_integrity.html Unfortunately, every semester we discover incidents in which students copy programming assignments from those of other students, making minor modifications so that the submitted programs are extremely similar but not identical. To help in identifying inappropriate similarities, we suggest that you and your TAs consider using Moss, a system that automatically determines similarities between programs in several languages, including C, C++, and Java. For more information about Moss, see: http://theory.stanford.edu/~aiken/moss/ Feel free to tell your students in advance that you will be using this software or any other system. And please emphasize, preferably in class, the importance of academic integrity. Rosemary Amico Assistant Director, Computer Science Courant Institute of Mathematical Sciences

Yifeng Ko <yk1962@nyu.edu> is the official tutor for this course. He will announce his schedule.

The tutoring will be via zoom. I will give more details about the tutoring zoom when I get them.

Remark: The chapter/section numbers for the material on C, agree with Kernighan and Plauger. However, the material is quite standard so, as mentioned before, if you already own a C book that you like, it should be fine.

Since Java includes much of C, my treatment can be very brief for the parts in common (e.g., control structures).

You should be reading the first few chapters of K&R or Dive into Systems for the next few lectures.

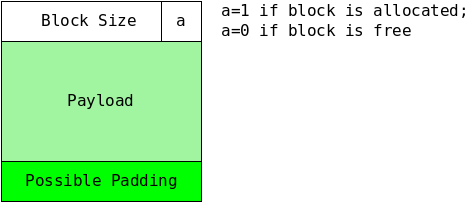

C programs consist of functions, which contain statements, and variables, the latter store values.

Hello WorldFunction

#include <stdio.h>

main() {

printf("Hello, world\n");

}

Although this program works, the second line should really be

int main(int argc, char *argv[]) {

I know this looks weird for now but remember how long it took you to

really understand

public static void main (String[] args)

Like Java.

Like Java

The program on the right is trivial. However, I wish to use it to introduce lvalues and rvalues. Each variable (in this program x and y) has two values associated with it: its address and the contents of that address. The latter is often called the value of the variable.

main() {

int x=5, y=8;

y = x+2;

}

Consider the program's assignment statement y = x+2;. To evaluate the right hand side (RHS) we need to know that the value of x is 5; we are not interested in knowing the address in which this 5 is stored. This value, 5, is called the rvalue of x because it is what is needed when x occurs on the RHS. In contrast the fact that 8 is the rvalue of y is not relevant since y does not occur on the RHS.

The LHS contains just y. But the fact that y has the value (specifically the rvalue) 8, is not relevant. What is relevant is the address of y since that is where the system must store the 7 that results from the addition. The address of y is called its lvalue since it is what is needed when y occurs on the LHS.

#include <stdio.h>

main() {

int n = 0, *pn;

pn = &n;

*pn = 33;

printf("n = %d\n", n);

}

This idea of addresses is a central theme of CSO because it is one key in understanding how Computer Systems are Organized.

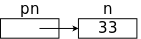

The program on the far right is actually correct and prints "n = 33".

The beginnings of an explanation is the diagram on the near right:

pn is a pointer to n, the (r)value of

pn is the lvalue (aka the address) of n.

#include <stdio.h>

main() {

int F, C;

int lo=0, hi=300, incr=20;

for (F=lo; F<=hi; F+=incr) {

C = 5 * (F-32) / 9;

printf("%d\t%d\n", F, C);

}

}

right amountof space to print the corresponding argument.

#include <stdio.h>

#define LO 0

#define HI 300

#define INCR 20

main() {

int F;

for (F=LO; F<=HI; F+=INCR)

printf("%3d\t%5.1f\n", F,

(F-32)*(5.0/9.0));

}

The simplest (i.e., most primitive) form of character I/O is getchar() and putchar(), which read and print a single character.

Both getchar() and putchar() are declared in stdio.h.

#include <stdio.h>

main() {

int c;

while ((c = getchar()) != EOF)

putchar(c);

}

File copy is conceptually trivial: getchar() a char and then putchar() this char until eof. The code is on the right and does require some comment despite is brevity.

extraparens, which are definitely not extra.

Homework: (1-7) Write a (C-language) program to print the value of EOF. (This is 1-7 in the book but I realize not everyone will have the book so I will type the problems into the notes.)

Homework: Write a program to copy its input to its output, replacing each string of one or more blanks by a single blank.

while (getchar() != EOF) ++numChars; for (numChars = 0; getchar() != EOF; ++numChars);

This is essentially a one-liner, which I have written in two different ways: once with a while loop and once with a for loop.

Now we need two tests: end-of-line and end-of-input. Perhaps the following is really a two-liner, but it does have only one semicolon.

while ((c = getchar()) != EOF) if (c == '\n') ++numLines;

So if a file has no newlines, it has no lines. Demo this with echo -n >noEOF "hello"

The Unix wc program prints the number of characters, words, and lines in the input. It is clear what the number of characters means. The number of lines is the number of newlines (so if the last line doesn't end in a newline, it doesn't count). The number of words is less clear. In particular, what should be the word separators?

#include <stdio.h>

#define WITHIN 1

#define OUTSIDE 0

main() {

int c, num_lines, num_words, num_chars;

int within_or_outside = OUTSIDE;

num_lines = num_words = num_chars = 0;

while ((c = getchar()) != EOF) {

++num_chars;

if (c == '\n')

++num_lines;

if (c == ' ' || c == '\n' || c == '\t')

within_or_outside = OUTSIDE;

else if (within_or_outside == OUTSIDE) {

// starting a word

++num_words;

within_or_outside = WITHIN;

}

}

printf("%d %d %d\n", num_lines, num_words, num_chars);

}

Homework: (1-12) Write a program that prints its input one word per line.

Remark: Class accounts on linserv1.

First round of your class accounts for 201-003 and 202-002 were

created tonight, and students will receive a welcome message if they

are getting a new account. Any student who previously had a CIMS

account in the past (whether or not it is active) will not get an

email but their account will be adjusted as necessary for use for

your class. The password reset link may be useful especially to

those students:

https://cims.nyu.edu/webapps/password/reset

We will re-run the class account creation scripts daily until the

drop deadline.

Thanks,

Shirley

Remark: The tutor has revised the hours. The hours listed in section 0.10 of these notes have been updated.

We are hindered in our examples because we don't yet know how to input anything other than characters and haven't yet written the program to convert a string of characters into an integer (easy) or (significantly harder) a floating point number.

#include <stdio.h>

#define N 10 // imagine you read in N

main() {

int i;

float x, sum=0, mu;

for (i=0; i<N; i++) {

x = i; // imagine you read in x

sum += x;

}

mu = sum / N;

printf("The mean is %f\n", mu);

}

#include <stdio.h>

#define N 10 // imagine you read in N

#define MAXN 1000

main() {

int i;

float x[MAXN], sum=0, mu;

for (i=0; i<N; i++) {

x[i] = i; // imagine you read in x[i]

}

for (i=0; i<N; i++) {

sum += x[i];

}

mu = sum / N;

printf("The mean is %f\n", mu);

}

#include <stdio.h>

#include <math.h>

#define N 5 // imagine you read in N

#define MAXN 1000

main() {

int i;

double x[MAXN], sum=0, mu, sigma;

for (i=0; i<N; i++) {

x[i] = i; // imagine you read in x[i]

sum += x[i];

}

mu = sum / N;

printf("The mean is %f\n", mu);

sum = 0;

for (i=0; i<N; i++) {

sum += pow(x[i]-mu,2);

}

sigma = sqrt(sum/N);

printf("The std dev is %f\n", sigma);

}

I am sure you know the formula for the mean (average) of N numbers: Add the numbers and divide by N. The mean is normally written μ. The standard deviation is the RMS (root mean square) of the deviations-from-the-mean, it is normally written σ. Symbolically, we write μ = ΣXi/N and σ = √(Σ((Xi-μ)2)/N). (When computing σ we sometimes divide by N-1 not N. Ignore the previous sentence.)

The first program on the right naturally reads N, then reads N numbers, and finally computes the mean of the latter. There is a problem; we don't know how to read numbers.

So I faked it by having N a symbolic constant and making x[i]=i.

I do not like the second version with its gratuitous array. It is (a little) longer, slower, and more complicated. Much worse it takes space (i.e., requires memory) proportional to N, for no reason. Hence it might not run at all for large N and small machines. However, I have seen students write such programs. Apparently, there is an instinct to use a three step procedure for all programming assignments:

But that is silly if, as in this example, you no longer need each value after you have read the next one.

The last example is a good use of arrays for computing the standard deviation using the RMS formula above. We do need to keep the values around after computing the mean so that we can compute all the deviations from the mean and, using these deviations, compute the standard deviation.

Note that, unlike Java, no use of new (or the

C analogue

malloc()) appears.

Arrays declared as in this program have a lifetime of the routine in which they are declared. Specifically sum and x are both allocated when main is called and are both freed when main is finished.

Note the declaration int x[MAXN] in the third version. In C, to declare a complicated variable (i.e., one that is not a primitive type like int or char), you write what has to be done to the variable to get one of the primitive types.

In C if we have int X[10]; then writing X in your

program is the same as writing &X[0].

& is the address of

operator.

More on this later when we discuss pointers.

There is of course no limit to the useful functions one can write. Indeed, the main() programs we have written above are all functions.

#include <stdio.h>

// Determine letter grade from score

// Demonstration of functions

char letter_grade (int score) {

if (score >= 90) return 'A';

else if (score >= 80) return 'B';

else if (score >= 70) return 'C';

else if (score >= 60) return 'D';

else return 'F';

} // end function letter_grade

main() {

short quiz;

char grade;

quiz = 75; // should read in quiz

grade = letter_grade(quiz);

printf("For a score of %3d the grade is %c\n",

quiz, grade);

} // end main

cc -o grades grades.c; ./grades

For a score of 75 the grade is C

A C program is a collection of functions (and global variables). Exactly one of these functions must be called main and that is the function at which execution begins.

One important issue is type matching. If a function f takes one int argument and f is called with a short, then the short must be converted to an int. Since this conversion is widening, the compiler will automatically coerce the short into an int, providing it knows that an int is required.

It is fairly easy for the compiler to know all this providing f() is defined before it is used, as in the code on the right.

We see on the right a function letter_grade defined. It has one int argument and returns a char.

Finally, we see the main program that calls the function.

The main program uses a short to hold the numerical grade and then calls the function with this short as the argument. The C compiler generates code to coerce this short value to the int required by the function.

// Average and sort array of random numbers

#define NUMELEMENTS 50

void sort(int A[], int n) {

int temp;

for (int x=0; x<n-1; x++)

for (int y=x+1; y<n; y++)

if (A[x] < A[y]) {

temp = A[y];

A[y] = A[x];

A[x] = temp;

}

}

double avg(int A[], int num) {

int sum = 0;

for (int x=0; x<n; x++)

sum = sum + A[x];

return (sum / n);

}

main() {

int table[NUMELEMENTS];

double average;

for (int x=0; x<NUMELEMENTS; x++) {

table[x] = rand(); /* assume defined */

printf("The elt in pos %d is %d\n",

x, table[x]);

}

average = avg(table, NUMELEMENTS );

printf("The average is %5.1f ", average);

sort(table, NUMELEMENTS );

for (x-=; x<NUMELEMENTS; x++)

printf("The element in position %3d is %3d \n",

x, table[x]);

}

The next example illustrates a function that has an array argument.

Remember that in a C declaration you decorate

the item being

declared with enough stuff (e.g., [], *) so that the result is a

primitive type such as int, double, or

char.

The function sort has two parameters, the second one n is simply an int. The parameter A, however, is more complicated. It is the kind of thing that when you take an element of it, you get an int.

That is, A is an array of ints.Unlike the array example in section 1.6, A does not have an explicit upper bound on its index. This is because the function can be called with arrays of different sizes. Since the function needs to know the size of the array (look at the for loops), a second parameter n is used for this purpose.

This example has two function calls: main calls both avg and sort. Looking at the call from main to sort we see that table is assigned to A and NUMELEMENTS is assigned to n. Looking at the code in main itself, we see that indeed NUMELEMENTS is the size of the array table and thus in sort, n is the size of A.

All seems well provided the called function appears before the function that calls it. Our examples have followed this convention.

So far so good; but if f calls g and (recursively) g calls f, we are in trouble. How can we have f before g, and also have g before f?

This will be answered very soon.

#include <stdio.h>

int f(int a, int b) {

a = a+b;

return a;

}

main() {

int x = 10;

int y = 20;

int ans;

ans = f(x, y);

}

Arguments in C are passed by value (the same as Java does for arguments that are not objects).

The simple example on the right illustrates a few points. First, some terminology. The variables a and b in f() are called parameters of f() whereas, x and y are called arguments of the call f(x, y).

When main() calls f() the values in the arguments are copied into the corresponding parameters. However, when f() returns, the values now in the parameters are NOT copied back to the arguments. This explains why the value in ans differs from the final value in x.

Try to avoid the fairly common error of assuming Copy-in AND Copy-out semantics.

Start Lecture #02

Remark: Homework #1 was entered on Brightspace 3 September; it is due 10 September.

Remark: The tutor sent a msg giving hours.

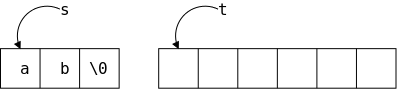

Unlike Java, C does not have a string datatype. A string in C is an array of chars. String operations like concatenate and copy (assignment) become functions in C. Indeed there are a number of standard library routines that act on strings.

Strings in C are null terminated

.

That is, a string of length 5 actually contains 6 characters, the 5

characters of the string itself and a sixth character = '\0' (called

null) indicating the end of the string.

This is a big deal.

Our goal is a program that reads lines from the terminal, converts them to C strings by appending '\0', and prints the longest line found. Pseudo code would be

while (more lines)

read line

if (line longer than previous longest)

save line and its length

print the saved line

Thus we need the ability to read in a line and the ability to save a line. We write two functions getLine() and copy() for these tasks (the book uses getline (all lower case), but that doesn't compile for me since there is a library routine in stdio.h with the same name and different signature).

#include <stdio.h> #define MAXLINE 1000 int getLine(char line[], int maxline); void copy(char to[], char from[]);

int main() { int len, max; char line[MAXLINE], longest[MAXLINE]; max = 0; while ((len=getLine(line,MAXLINE))>0) if (len > max) { max = len; copy(longest,line); } if (max>0) printf("%s", longest); return 0; }

int getLine(char s[], int lim) { int c, i; for (i=0; i<lim-1 && (c=getchar())!=EOF && c!='\n'; ++i) s[i] = c; if (c=='\n') { s[i]= c; ++i; } s[i] = '\0'; return i; }

void copy(char to[], char from[]) { int i; i=0; while ((to[i] = from[i]) != '\0') ++i; }

Given the two supporting routines, main is fairly simple, needing only a few small comments.

declare (or define) before useso either main would have to come last or the declarations are needed. Since only main uses the routines, the declarations could have been in main but it is common practice to put them outside as shown. Although these routines are not recursive (and hence we could have placed the called routine before the caller), declarations like the one shown are needed for recursive routines.

This function is discussed further in recitation.

The line is returned in the parameter s[], the function

itself returns the length.

The for continuation condition

in getLine

is rather complex.

(Note that the for loop has an empty body; the entire

action occurs in the for statement itself.)

The condition part of the for tests for 3 situations.

Perhaps it would be clearer if the test was simply i<lim-1 and the rest was done with if-break statments inside the loop.

In C, if you write f(x)+g(y)+h(z) you have

no guarantee of the order the functions will be invoked.

(Thus the program would be non-deterministic if g() modified

something used by f().)

However, the && and || operators do

guarantee left-to-right ordering to enforce short-circuit

condition evaluation.

This ordering is important here since the test for '\n'

must be performed after the getchar() has

assigned its value to c.

The copy() function is declared and defined to return void.

Homework: Simplify the for condition in getline() as just indicated.

#include <stdio.h>

#include <math.h>

#define A +1.0 // should read

#define B -3.0 // A,B,C

#define C +2.0 // using scanf()

void solve (float a, float b, float c);

int main() {

solve(A,B,C);

return 0;

}

void solve (float a, float b, float c) {

float d;

d = b*b - 4*a*c;

if (d < 0)

printf("No real roots\n");

else if (d == 0)

printf("Double root is %f\n", -b/(2*a));

else

printf("Roots are %f and %f\n",

((-b)+sqrt(d))/(2*a),

((-b)-sqrt(d))/(2*a));

}

#include <stdio.h>

#include <math.h>

#define A +1.0 // main() should

#define B -3.0 // read A,B,C

#define C +2.0 // using scanf()

void solve(void); // declaration of solve()

float a, b, c; // definitions

int main() { // definition of main()

extern float a, b, c; // declarations

a=A;

b=B;

c=C;

solve();

return 0;

}

void solve () { // definition of solve()

extern float a, b, c; // declarations

float d;

d = b*b - 4*a*c;

if (d < 0)

printf("No real roots\n");

else if (d == 0)

printf("Double root is %f\n", -b/(2*a));

else

printf("Roots are %f and %f\n",

((-b)+sqrt(d))/(2*a),

((-b)-sqrt(d))/(2*a));

}

The two programs on the right find the real roots (no imaginary numbers) of the quadratic equation

ax2+bx+c

They proceed by using the standard technique of first calculating the discriminant

d = b2-4ac

Since these programs deal only with real roots, they punt when d<0.

The programs themselves are not of much interest.

Indeed a Java version would be too easy

to be a midterm exam

question in 101.

Our interest is confined to the way in which the

coefficients a, b, and c are passed from

the main() function to the helper

routine solve().

The main() function calls a function solve() passing it as arguments the three coefficients, A,B,C.

There is little to say. Method 1 is a simple program and uses nothing new.

The second main() program communicates with solve() using external variables rather than arguments/parameters.

declare (or define) before use. If you define before using, you don't need to also declare. But if you have recursion (f() calls g() and g() calls f()), you can't have both definitions before the corresponding uses so you

Similar to Java: A variable name must begin with a letter and then can use letters and numbers. An underscore is a letter, but you shouldn't begin a variable name with one since that is conventionally reserved for library routines. Keywords such as if, while, etc are reserved and cannot be used as variable names.

C has very few primitive types.

naturalsize of an integer on the host machine.

There are qualifiers that can be added. One pair is long/short, which are used with int. Typically short int is abbreviated short and long int is abbreviated long.

long is guaranteed be at least as big as int, which is guaranteed to be as least as big as short.

There is no short float, short double, or long float. The type long double specifies extended precision.

The qualifiers signed or unsigned can be applied to char or any integer type. They basically determined how the sign bit is interpreted. An unsigned char uses all 8 bits for the integer value and thus has a range of 0–255; whereas, a signed char has an integer range of -128–127.

Note: We will have much more to say about data types, e.g., signed and unsigned, next month after we finish our treatment of C.

A normal integer constant such as 123 is an int, unless it is too big in which case it is a long. But there are other possibilities.

There are no string variables in C. Although there are no string variables, there are string constants, written as zero or more characters surrounded by double quotes. A null character '\0' is automatically appended.

Alternative method of assigning integer values to symbolic names.

enum Boolean {false, true}; // false is zero, true is 1

enum Month {Jan=1, Feb, Mar, Apr, May, Jun, Jul, Aug, Sep, Oct, Nov, Dec};

Simple examples.

int x, y; char c; double q1, q2;

(Stack allocated, i.e., local) arrays are simple since the entire array is allocated not just a reference (no new/malloc required).

int x[10];

Initializations may be given.

int x=5, y[2]={44,6}; z[]={1,2,3};

char str[]="hello, world\n";

The qualifier const makes the variable read only so it must be initialized in the declaration.

Mostly the same as java.

Please do not call % the mod (or modulo) operator, unless you know that the operands are positive. The correct name for % is the remainder operator.

Again very little difference from Java.

Please remember that && and || are required to be short-circuit operators. That is, they evaluate the right operand only if needed.

There are two kinds of conversions: automatic conversions, called coercions, and explicit conversions, called casts.

C coerces narrow

arithmetic types to wide ones.

{char, short} → int → long

float → double → long double

long → float // precision can be lost

int atoi(char s[]) {

int i, n=0;

for (i=0; s[i]>='0' && s[i]<='9'; i++)

n = 10*n + (s[i]-'0'); // assumes ascii

return n;

}

The program on the right (ascii to integer) converts a character string representing an integer to the integral value.

Unsigned coercions are more complicated; you can read about them in the book or wait a few weeks when we will cover them.

The syntax

(type-name) expression

converts the value to the type specified. Note that e.g., (double) x converts the value of x; it does not change x itself.

Homework: (2.3) Write the function htoi(s), which converts a string of hexadecimal digits (including an optional 0x or 0X) into its equivalent integer value. The allowable digits are 0 through 9, a through f, and A through F.

The same as Java.

Remember that neither x++ nor ++x are the same as x=x+1 because, with the operators, x is evaluated only once, which becomes important when x is itself an expression with side effects.

x[i++]++ // increments some (which?) element of an array x[i++] = x[i++]+1 // puts incremented value in ANOTHER slot

In fact the last line is illegal (what order do you do the two increments of i?)

Homework: (2-4). Write an alternate version of squeeze(s1,s2) (defined in the text) that deletes each character in the string s1 that matches any character is the string s2.

#include <stdio.h>

int main(void) {

int x = 4, y = 1;

printf("x&y=%i x&&y=%i\n", x&y, x&&y);

return 0;

}

x%y=0 x&&y=1

The same as Java

Note that x&&y is different from x&y.

int bitcount (unsigned x) {

int b;

for (b=0; x!=0; x>>= 1)

if (x&01) // octal (not needed)

b++;

return b;

}

The same as Java: += -= *= /= %= <<= >>= &= ^= |=

The program on the right counts how many bits of its argument are 1. Right shifting the unisigned x causes it to be zero-filled. Anding with a 1, gives the LOB (low order bit). Writing 01 indicates an octal constant (any integer beginning with 0; similarly starting with 0x indicates hexadecimal). Both are convenient for specifying specific bits (because both 8 and 16 are powers of 2). Since the constant in this case has value 1, the 0 has no effect.

printf("You enrolled in %d course%s.\n", n, (n==1) ? "" : "s");

The same as Java:

Homework: (2-10). Rewrite the function lower(), which converts upper case letters to lower case with a conditional expression instead of if-else.

| Operators | Associativity |

|---|---|

| () [] -> . | left to right |

| ! ~ ++ -- + - * & (type) sizeof | right to left |

| * / % | left to right |

| + - | left to right |

| << >> | left to right |

| < <= > >= | left to right |

| == != | left to right |

| & | left to right |

| ^ | left to right |

| | | left to right |

| && | left to right |

| || | left to right |

| ?: | right to left |

| = += -= *= /= %= &= ^= |= <<= >>= | right to left |

| , | left to right |

The table on the right is copied (hopefully correctly) from the book. It includes all operators, even those we haven't learned yet. I certainly don't expect you to memorize the table. Indeed one of the reasons I typed it in was to have an online reference I could refer to since I do not know all the precedences.

Homework: Check the table above for typos and report any you find.

Not everything is specified. For example if a function takes two arguments, the order in which the arguments are evaluated is not specified. Consider f(x++,x++);

Also the order in which operands of a binary operator like + are evaluated is not specified. So f() could be evaluated before or after g() in the expression f()+g(). This becomes important if, for example, f() alters a global variable that g() reads.

#include <stdio.h>

void main (void) {

int x=3, y;

y = + + + + + x;

y = - + - + + - x;

y = - ++x;

y = ++ -x;

y = ++ x ++;

y = ++ ++ x;

}

Question: Which of the expressions on the right are

illegal?

Answer: The last three.

They apply ++ to values not variables (i.e, to rvalues not

lvalues).

I mention this because at this point in a previous semester there was some discussion about ++ ++. The distinction between lvalues and rvalues will become very relevant when we discuss pointers.

Since pointers have presented difficulties for students in the past, I use every opportunity that arises to give ways of looking at the problem.

Since ++ does an assignment (as well as an addition) it needs a place to put the result, i.e., an lvalue.

Start Lecture #03

int t[]={1,2};

int main() {

22;

return 0;

}

C is an expression language; so the constant 22

and

the assignment x=33

have values (i.e., rvalues).

One simple statement is an expression followed by a semicolon;

For example, the program on the right is legal.

As in Java, a group of statements can be enclosed in braces to form a compound statement or block. We will have more to say about blocks later in the course.

Same as Java.

Same as Java.

Same as Java.

Same as Java.

#include <ctype.h>

int atoi(char s[]) {

int i, n, sign;

for (i=0; isspace(s[i]); i++) ;

sign = (s[i]=='-') ? -1 : 1;

if (s[i]=='+' || s[i]=='-')

i++;

for (n=0; isdigit(s[i]); i++)

n = 10*n + (s[i]-'0');

return sign * n;

}

Same as Java. As we shall see, the loops in the book show the hand of a master.

The program on the right (ascii to integer) illustrates several points, as well as being extremely useful in its own right.

workis done in the termination test.

for (i=0, j=0; i+j<n; i++,j+=3)

printf ("i=%d and j=%d\n", i, j);

If two expressions are separated by a comma, they are evaluated left to right and the final value is the value of the one on the right. This operator often proves convenient in for statements when two variables are to be incremented.

Same as Java.

Same as Java.

The syntax is

goto label;

for (...) {

for (...) {

while (...) {

if (...) goto out;

}

}

}

out: printf("Left 3 loops\n");

The label has the form of a variable name. A label followed by a colon can be attached to any statement in the same function as the goto. The goto transfers control to that statement.

Note that a break in C (or Java) only leaves one level of looping so would not suffice for the example on the right.

The goto statement was deliberately omitted from Java. Poor use of goto can result in code that is hard to understand and hence goto is rarely used in modern practice.

The goto statement was much more commonly used in the past.

Homework: Write a C function escape(char s[], char t[]) that converts the characters newline and tab into two character sequences \n and \t as it copies the string t to the string s. Use the C switch statement. Also write the reverse function unescape(char s[], char t[]).

#include <stdio.h>

#define MAXLINE 100

int getline(char line[], int max);

int strindex(char source[], char searchfor[]);

char pattern[]="x y"; // "should" be input

int main() {

char line[MAXLINE];

int found=0;

while (getline(line,MAXLINE) > 0)

if (strindex(line, pattern) >= 0) {

printf("%s", line);

found++;

}

return found;

}

int getline(char s[], int lim) {

int c, i;

i = 0;

while (--lim>0 && (c=getchar())!=EOF

&& c!='\n')

s[i++] = c;

if (c == '\n')

s[i++] = c;

s[i] = '\0';

return i;

}

int strindex(char s[], char t[]) {

int i, j, k;

for(i=0; s[i]!='\0'; i++) {

for (j=i,k=0; t[k]!='\0' && s[j]==t[k];

j++,k++) ;

if (k>0 && t[k]=='\0')

return i;

}

return -1;

}

The Unix utility grep (Global Regular Expression Print) prints all occurrences of a given string (or more generally a regular expression) from standard input. A very simplified version is on the right.

The basic program is

while there is another line

if the line contains the string

print the line

Getting a line and seeing if there are more is getline(); a slightly revised version is on the right. Note that a length of 0 means EOF was reached; an "empty" line still has a newline char '\n' and hence has length 1.

Printing the line is printf().

Checking to see if the string is present in the line is the new code. The choice made was to define a function strindex() that is given two strings s and t and returns the position in s (i.e., the index in the array) where t occurs. strindex() returns -1 if t does not occur in s.

The program is on the right; further comments follow.

C-style, i.e., the declarations specify what you do to each parameter in order to get a char or int. These are not definitions of getline() and strindex(), which are given later. The declarations include only the header information and not the body; they describe only how to use the functions, not what the functions do.

Note that a function definition is of the form

return-type function-name(parameters) {

declarations and statements

}

The default return type is int, but I recommend not utilizing this fact and instead always declaring the return type.

The return statement is like Java.

The book correctly gives all the defaults and explains why they are what they are (compatibility with previous versions of C). I find it much simpler to always

A C program consists of external objects, which are either variables or functions.

Variables and functions defined outside any function are called external.

Variables defined inside a function are called internal.

Functions defined inside another function would also be

called internal; however standard C does not have internal

functions.

That is, you cannot in C define a function inside another function.

In this sense C is not a fully block-structured language

(see block structure

below).

As stated, a variable defined outside functions is external. All subsequent functions in that file will see the definition (unless it is overridden by an internal definition).

External variables can be used, instead of parameters/arguments to pass information between functions. It is sometimes convenient not to repeat a long list of arguments common to several functions. However, using external variables also has problems: It makes the exact information flow harder to deduce when reading the program.

When we solved quadratic equations in section 1.10 our second method used external variables.

Scope rules determine the visibility of names in a program. In C the scope rules are fairly simple.

Since C does not have internal functions, all internal names are variables. Internal variables can be automatic or static. We have seen only automatic internal variables, and this section will discuss only them. Static internal variables are discussed in section 4.6 below.

An automatic variable defined in a function is visible from the

definition until the end of the function (but see

If the same variable name is defined internal to two functions, the variables are unrelated.

Parameters of a function are the same as local variables in these respects.

int main(...) {...}

int value;

float joe(...) {...}

float sam;

int bob(...) {...}

An external name (function or variable) is visible from the point of its definition (or declaration as we shall see below) until the end of that file. In the example on the right

There can be only one definition of a given external name in the entire program (even if the program includes many files). However, there can be multiple declarations of the same name.

A declaration describes a variable (gives its type) but does not allocate space for it. A definition both describes the variable and allocates space for it.

extern int X; extern double z[]; extern float f(double y);

Thus we can put declarations of a variable X, an array z[], and a function f() at the top of every file and then X and z are visible in every function in the entire program. Declarations of z[] do not give its size since space is not allocated; the size is specified in the definition.

If declarations of joe() and bob() were added at the top of the previous example, then main() would be able to call them.

If an external variable is to be initialized, the initialization must be put with the definition, not with a declaration.

How to tell apart declarations or definitions

#include <stdio.h>

double f(double x);

int main() {

float y;

int x = 10;

printf("x in main is %i\n", x);

printf("f(x) is %f\n", f(x));

return 0;

}

double f(double x) {

printf("x in f is %f\n", x);

return x;

}

x in main is 10

x in f is 10.000000

f(x) is 10.000000

The code on the right shows how valuable having the types declared can be. The function f() is the identity function. However, main() knows that f() takes a double so the system automatically converts x to a double when calling f().

It would be awkward to have to change every file in a big programming project when a new function was added or had a change of signature (types of arguments and return value). What is done instead is that all the declarations are included in a single header file. The definitions remain scattered over many files. (Each function is naturally defined only once).

For now assume the entire program is in one directory. Create a file with a name like functions.h containing the declarations of all the functions. Then early in every .c file write the line

#include "functions.h"

Note the quotes not angle brackets, which indicates that

functions.h is located in the current directory, rather

than in the standard placethat is used for <>.

We need to distinguish the lifetime of the value in a variable from the visibility of the variable.

Consider the variable x in the trivial example

void f(void) { int x = 5; printf(%d\n", x++); }

No matter how many times f() is called, the value printed will always be 5. This is because each call re-initializes x to 5. We say that the lifetime of x's value is one execution of the function. In contrast an external variable maintains values assigned to it; its lifetime is permanent.

In addition, x, a local variable, is not visible in any other function. That is, the visibility of x is local to the function in which it is defined.

The adjective static has very different meanings when applied to internal and external variables.

int main(...){...}

static int b16;

void sam(...){...}

double beth(...){...}

If an external variable is defined with the static attribute, its visibility is limited to the current file. In the example on the right b16 is naturally visible in sam() and beth(), but not main(). The addition of static means that if another file has a definition or declaration of b16, with or without static, the two b16 variables are not related.

If an internal variable is declared static, its lifetime is the entire execution of the program. This means that if the function containing the variable is called twice, the value of the variable at the start of the second call is the final value of that variable at the end of the first call.

As we know, there are no internal functions in standard C. If an (external) function is defined to be static, its visibility is limited to the current file (as for static external variables).

Ignore this section. Register variables were useful when compilers were primitive. Today, compilers can generally decide, better than programmers, which variables should be put in register.

Standard C does not have internal functions, that is you cannot in C define a function inside another function. In this sense C is not a fully block-structured language.

Of course C does have internal variables; we have used them in almost every example. That is, most functions we have written (and will write) have variables defined inside them.

#include <stdio.h>

int main(void) {

int x = 5;

printf ("The value of outer x is %d\n", x);

{

int x = 10;

printf ("The value of inner x is %d\n", x);

}

printf ("The value of the outer x is %d\n", x);

return 0;

}

The value of outer x is 5.

The value of inner x is 10.

The value of outer x is 5.

Also C does have block structure with respect to variables.

This means that inside a block (remember that a block is a bunch of

statements surrounded by {}) you can define a new variable

with the same name as the old one.

These two variables are

For example, the program on the right produces the output shown.

Remark: The gcc compiler for C does permit one to define a function inside another function. These are called nested functions. Some consider this gcc extension to be evil; we will not use it.

Note that we have used nested blocks many times without calling them out. Specifically, when you use {} to group the body of a for loop or the then portion of an if-then-else these also are blocks since they are enclosed by {}.

Homework: Write a C function int odd (int x) that returns 1 if x is odd and returns 0 if x is even. Can you do it without an if statement?

Static and external variables are, by default, initialized to zero. Automatic i.e., non-static, internal variables (the only kind left) are not initialized by default.

As in Java, you can write int X=5-2;. For external or static scalars, that is all you can do.

int x=4; int y=x-1;

For automatic, internal scalars the initialization expression can involve previously defined values as shown on the right (even function calls are permitted).

int BB[8] = {4,9,2}

int AA[] = {3,5,12,7};

char str[] = "hello";

char str[] = {'h','e','l','l','o','\0'}

You can initialize an array by giving a list of initializers as shown on the right.

The same as Java.

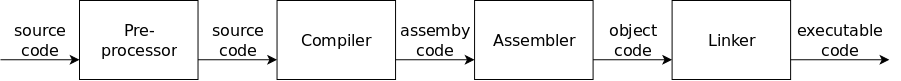

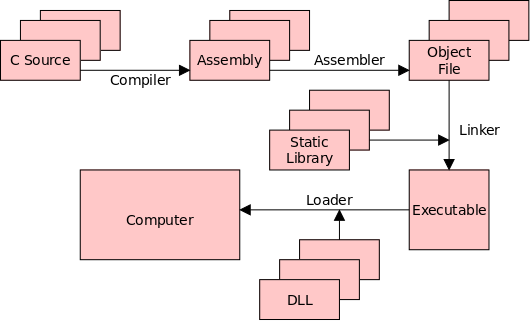

Normally, before the compiler proper sees your program, a utility called the C preprocessor is invoked to include files and perform macro substitutions.

#include <filename> #include "filename"

We have already discuss both forms of file inclusion.

In both cases the file mentioned is textually inserted at the point

of inclusion.

The difference between the two is that the first form looks for

filename in a system-defined standard place

;

whereas, the second form first looks in the current directory.

#define MAXLINE 20 #define MULT(A, B) ((A) * (B)) #define MAX(X, Y) ((X) > (Y)) ? (X) : (Y) #undef getchar

We have already used examples of macro substitution similar to the first line on the right. The second line, which illustrates a macro with arguments is more interesting.

Without all the parentheses on the RHS, the MULT macro

would still be legal, but would (sometimes) give the wrong answers.

Question: Why?

Answer: Consider MULT(x+4, y+3)

Note that macro substitution is not the same as a function call (with standard call-by-value or call-by-reference semantics). Even with all the parentheses in the third example you can get into trouble since MAX(a++,5) can increment a twice. If you know call-by-name from algol 60 fame, this will seem familiar.

We probably will not use the fourth form. It is used to un-define a macro from a library so that you can write another version.

There is some fancy stuff involving # in the RHS of the macro definition. See the book for details; I do not intend to use it.

#if integer-expr ... #elif integer-expr ... #else ... #endif

The C-preprocessor has a very limited set of control flow items. On the right we see how the C

if (cond1) ... else if (cond2) ... else .. end if

construct is written. The individual conditions are simple integer expressions consisting of integers, some basic operators and little else. Perhaps the most useful additions are the preprocessor function defined(name), which evaluates to 1 (true) if name has been #define'd, and the ! operator, which converts true to false and vice versa.

#if !defined(HEADER22) #define HEADER22 // The contents of header22.h // goes here #endif

We can use defined(name) as shown on the right to ensure that a header file, in this case header22.h, is included only once.

Question: How could a header file be included

twice unless a programmer foolishly wrote the same #include

twice?

Answer: One possibility is that a user might

include two systems headers h1.h and h2.h each of

which includes h3.h.

Two other directives #ifdef and #ifndef test whether a name has been defined. Thus the first line of the previous example could have been written ifndef HEADER22.

#if SYSTEM == MACOS

#define HDR "macos.h"

#elsif SYSTEM == WINDOWS

#define HDR "windows.h"

#elsif SYSTEM == LINUX

#define HDR "linux.h"

#else

#define HDR "empty.h"

#endif

#include HDR

On the right we see a slightly longer example of the use of preprocessor directives. Assume that the name SYSTEM has been set to the name of the system on which the current program is to be run (not compiled). Assume also that individual header files have been written for macos, windows, and linux systems. Then the code shown will include the appropriate header file.

Note: The quotes used in the various #defines for HDR are not required by #define, but instead are needed by the final #include.

public class X {

int a;

public static void main(String args[]) {

int i1;

int i2;

i1 = 1;

i2 = i1;

i1 = 3;

System.out.println("i2 is " + i2);

X x1 = new X();

X x2 = new X();

x1.a = 1;

x2 = x1; // NOT x2.a = x1.a

x1.a = 3;

System.out.println("x2.a is " + x2.a);

}

}

Pointers are a big difference between Java and C. You can read chapter 2 of Dive into Systems for another account of C pointers.

Much of the material on pointers has no explicit analogue in Java; it is there kept under the covers. If in Java you have an Object obj, then obj is actually what C would call a pointer. The technical term is that Java has reference semantics for all objects. In C this will all be quite explicit

To give a Java example, look at the snippet on the right. The first part works with integers. We define 2 integer variables; initialize the first; set the second to the first; change the first; and print the second. Naturally, the second has the initial value of the first, namely 1.

The second part deals with X, a trivial class, whose objects have just one data component, an integer called a. We mimic the above algorithm. We define two X's and work with their integer field (a). We then proceed as above: initialize the first integer field; set the second to the first; change the first; and print the second. The result is different from the above! In this case the second has the altered value of the first, namely 3.

The key difference between the two parts is that (in Java) simple scalars like i1 have value semantics; whereas objects like x1 have reference semantics. But enough Java, we are interested in C.

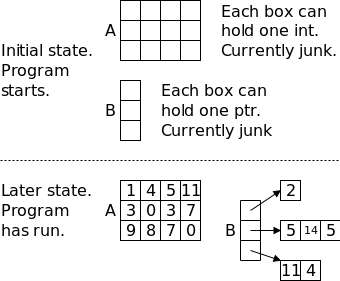

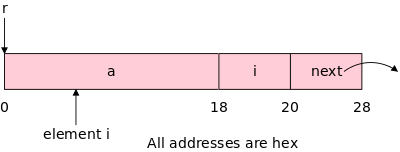

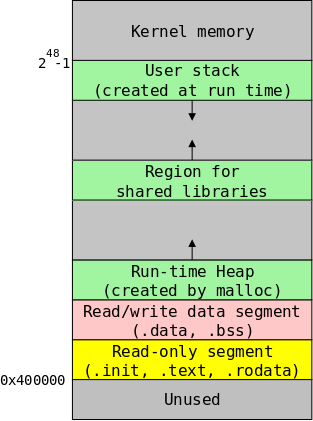

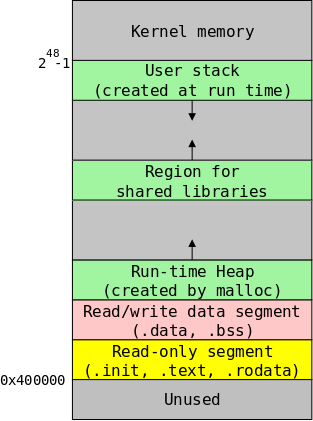

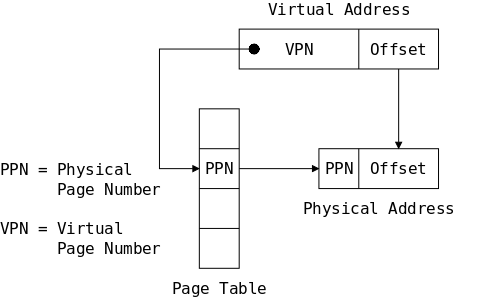

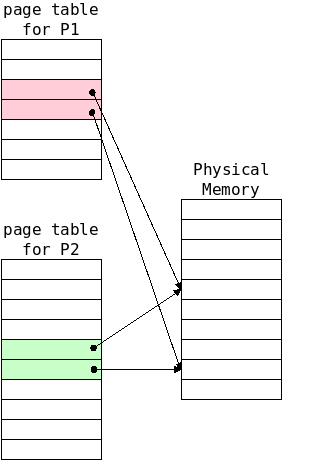

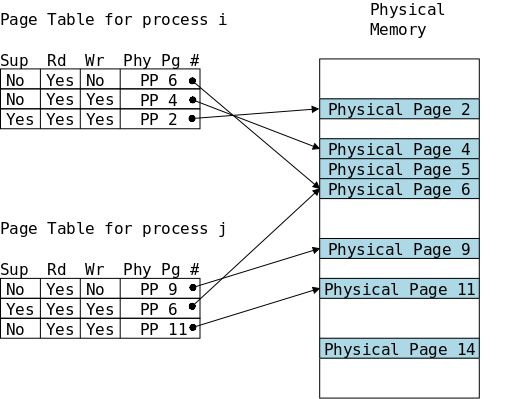

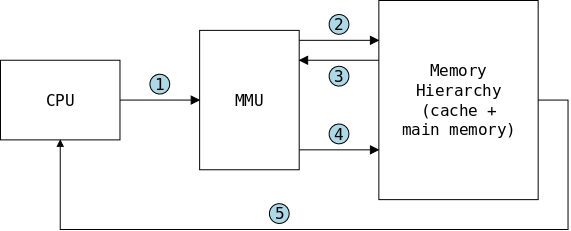

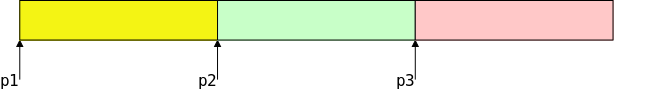

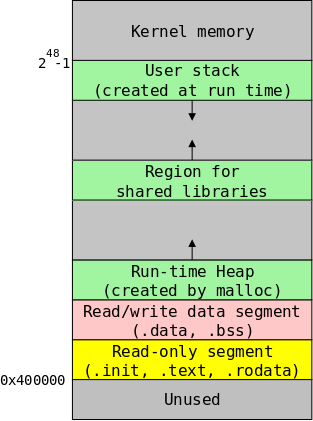

You will learn later this semester and again in 202, that the OS finagles memory in ways that would make Bernie Madoff smile. But, in large part thanks to those shenanigans, user programs can have a simple view of memory. For us C programmers, memory is just a large array of consecutively numbered locations.

The machine model we will use in this course is that the fundamental unit of addressing is a byte and a character (a char) exactly fits in a byte. Other types like short, int, double, float, long normally take more than one byte, but always a consecutive range of bytes.

One consequence of our memory model is that associated with int z=5; are two numbers. The first number is the address of the location in which z is stored. The second number is the value stored in that location; in this case that value is 5. The first number, the address, is often called the lvalue; the second number, the contents, is often called the rvalue. Why l and r? I know we did this already; I think it is worth repeating.

Consider

z = z + 1;

To evaluate the right hand side (RHS) we need to add 5 to 1.

In particular, we need the value contained in the memory location

assigned to z, i.e., we need 5.

Since this value is what is needed to evaluate the RHS of an

assignment statement it is called an rvalue.

Then we compute 6=5+1. Where should we put the 6? We look at the LHS and see that we put the 6 into z; that is, into the memory location assigned to z. Since it is the location that is needed when evaluating a LHS, the address is called an lvalue.

Start Lecture #04

As we have just seen, when a variable appears on the LHS, its lvalue or address is used. What if we want the address of a variable that appears on the RHS; how do we get it?

In a language like Java the answer is simple; we don't.

In C we use the unary operator & and write p=&x; to assign the address of x to p. After executing this statement we say that p points to x or p is a pointer to x. That is, after execution, the rvalue of p is the lvalue of x. In other words the value of p is the address of x.

int x=13;

Look at the declarations on the right. x is familiar; it is an integer variable initially containing 13. Specifically, the rvalue of x is (initially) 13. What about the lvalue of x, i.e., the location in which the 13 is stored? It is not an int; it is an address into which an int can be stored. Alternately said it is pointer to an int.

The unary prefix operator & produces the address of a variable, i.e., &x gives the lvalue of x, i.e. it gives a pointer to x.

The unary operator * does the reverse action. When * is applied to a pointer, it gives the object (object is used in the English not OO sense) pointed to. The * operator is called the dereferencing or indirection operator.

int x=13; int *p = &x;

Now look at the declaration of p on the right. It says that p is the kind of thing, that when you apply * to it you get an int, i.e., p is a pointer to an int. That is why we can initialize p to &x.

Note: Try to avoid the common error of thinking the second line on the right initializes *p to &x. It doesn't. It declares and initializes p not *p.

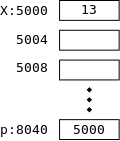

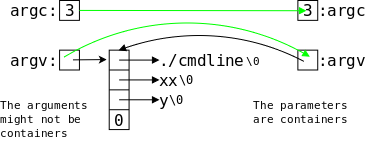

On the right we show how p and x might be stored in memory. After we finish with C we will study the memory model in more detail. Here I just give enough to understand that pointers like p are also variables that are stored just like ints, floats, and chars.

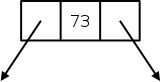

The basic storage unit on modern computers is a byte. We shall assume that a char fits perfectly in a byte. However, ints, floats, and pointers are bigger. Each requires several bytes. For today assume each is 4 bytes.

In the diagram on the right x happens to be stored in locations 5000-5003 (i.e., each box is 4 bytes). x has value 13; more precisely its rvalue is 13. Since the address of x is 5000, the lvalue of x is 5000.

The integer pointer p happens to be stored in 8040-8043; i.e., its address or lvalue happens to be 8040. Since p points to x, the rvalue of p equals the lvalue of x, which is 5000.

// part one of three int x=1; int y=2; int z[10]; int *ip; int *jp; ip = &x;

Consider the code sequence on the right (part one). The first 3 lines we have seen many times before; the next three are new. Recall that in a C declaration, all the doodads around a variable name tell you what you must do to the variable to get the base type at the beginning of the line. Thus the fourth line says that if you dereference ip (i.e., if you look at the contents of the address in ip, not of ip), you get an integer. Common parlance is to call ip an integer pointer (which is why I named it ip). Similarly, jp is another integer pointer.

At this point both ip and jp are uninitialized. The last line sets ip to the address, of x. Note that the types match, both ip and &x are pointers to an int.

// part two of three y = *ip; // L1 *ip = 0; // L2 ip = &z[0]; // L3 *ip = 0; // L4 jp = ip; // L5 *jp = 1; // L6

In part two, L1 sets y=1 as follows: ip now points to x, * does the dereference so *ip is x. Since we are evaluating the RHS, we take the contents not the address of x and get 1.

L2 sets x=0;. The RHS is clearly 0. Where do we put this zero? Look at the LHS: ip currently points to x, * does a dereference so *ip is x. Since we are on the LHS, we take the address and not the contents of x and hence we put 0 into x.

L3 changes ip; it now points to z[0]. So L4 sets z[0]=0;

Pointers can be used without the deferencing operator. L5 sets jp to ip. Since ip currently points to z[0], jp now does as well. Hence L6 sets z[0]=1;

// part three of three ip = &x; // L1 *ip = *ip + 10; // L2 y = *ip + 1; // L3 *ip += 1; // L4 ++*ip; // L5 (*ip)++; // L6 *ip++; // L7

Part three begins by re-establishing ip as a pointer to x so L2 increments x by 10 and L3 sets y=x+1;.

L4 increments x by 1 as does L5 (because the unary operators ++ and * are right associative).

L6 also increments x, but L7 does not.

By right associativity we see that the increment precedes the

dereference, so the pointer is incremented (not the pointee

).

The full story awaits section 5.4

below.

void bad_swap(int x, int y) {

int temp;

temp = x;

x = y;

y = temp;

}

The program on the right is what a novice programer just learning C (or Java) would write. It is supposed to swap the two arguments it is called with. However, it fails due to call by value semantics for function calls in C.

When another function calls swap(a,b) the values of the arguments a and b are transmitted to the parameters x and y and then swap() interchanges the values in x and y. But when swap() returns, the final values in x and y are NOT transmitted back to the arguments: a and b are unchanged.

But functions that change the values of their arguments are useful! We won't give them up without a fight.

Actually, what is needed is to be able to change the value of

variables used in the caller (even if some related

variables

become the arguments) and that distinction is the key.

Just because we want to swap the values of a

and b, doesn't mean the arguments have to be literally

a and b.

void swap(int *px, int *py) {

int temp;

temp = *px;

*px = *py;

*py = temp;

}

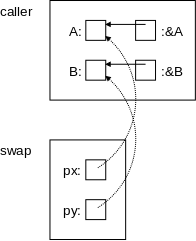

The program on the right has two parameters px and py each of which is a pointer to an integer (*px and *py are the integers). Since C is a call-by-value language, changes to the parameters, which are the pointers px and py would not result in changes to the corresponding arguments. But the program on the right doesn't change the pointers at all, instead it changes the values they point to.

Since the parameters are pointers to integers, so must be the

arguments.

A typical call to this function would be

int A=10,B=20; swap(&A,&B);

It is crucial to understand, how this call results in A becoming 20, the value previously in B, and B becoming 10, the value previously in A.

On the right is a pictorial explanation.

A has a certain address.

&A equals

that address (more precisely the

rvalue of &A = the lvalue of A).

Similarly for py, &B, and B.

These are shown by the solid arrows in the diagram.

The call swap(&A,&B) copies (the rvalue of) &A into (the rvalue of) the first parameter, which is px. Similarly for &B and the second parameter, py. These are shown by the dotted arrows. Thus the value of px is the address of A, which is indicated by the arrow. Again, to be pedantic, the rvalue of px equals the rvalue of &A, which equals the lvalue of A. Similarly for py, &B, and B.

Swapping px with py would change the dotted arrows, but would not change anything in the caller. However, we don't swap px with py; instead we swap *px with *py. That is, we dereference the pointers and swap the things pointed to! This subtlety is the key to understanding the effect of many C functions. It is crucial.

Homework: Write rotate3(A,B,C) that sets A to the old value of B, sets B to old C, and C to old A.

Homework: Write plusminus(x,y) that sets x to old x + old y and sets y to old x - old y.

Start Lecture #05

#include <stdio.h> #define BUFSIZE 100 char buf[BUFSIZE]; int bufp = 0; int getch(void); void ungetch(int); int getint(int *pn);

int getch(void) { return (bufp>0) ? buf[--bufp] : getchar(); }

void ungetch(int c) { if (bufp >= BUFSIZE) printf("ungetch: too many chars\n"); else buf[bufp++] = c; }

#include <stdio.h> #include <ctype.h> int getint(int *pn) { int c, sign; while (isspace(c=getch())) ; if (!isdigit(c) && c!=EOF && c!='+' && c!='-') { ungetch(c); return 0; } sign = (c=='-') ? -1 : 1; if (c=='+' || c=='-') c = getch(); for (*pn = 0; isdigit(c); c=getch()) *pn = 10 * *pn + (c-'0'); *pn *= sign; if (c != EOF) ungetch(c); return c; }

The program pair getch() and ungetch() generalize getchar() by supporting the notion of unreading a character, i.e., having the effect of pushing back several already read characters.

Note that ungetch() is careful not to exceed the size of the buffer used to stored the pushed back characters. Remember that the C compiler does not generate run-time checks that prevent you from accessing an array beyond its bound. As mentioned previously, a number of break ins had been enabled by the lack of such checks in library programs.

Also shown is getint(), which reads an integer from standard input (stdin) using getch() and ungetch().

getint() returns the integer read via a parameter. As we have seen the new value of a parameter is not passed back to the caller. Hence, getint() uses the pointer/address business we just saw with swap().

Specifically any change made to pn by getint() would be invisible to the caller. However, getint() changes *pn; a change the caller does see.

The value returned by the function itself is the status: zero means the next characters do not form an integer, EOF (which is negative) means we are at the end of file, positive means an integer has been found.

Briefly the program works as follows.

Skip blanks

Check for legality

Determine sign

Evaluate number

one digit at a time

Although short, the program is not trivial. Indeed, there are some details to note.

123(no newline at the end), it will set *pn=123 as desired but will return EOF. I suspect that most programs using getint() will, in this case, ignore *pn and just treat it as EOF.

If you were asked to produce a getint() function you would have three tasks.

The third is clearly the easiest task. I suspect that the first is the hardest.

Homework: 5-1. As written, getint() treats a + or - not followed by a digit as a valid representation of zero. Fix it to push such a character back on the input.

> Hi, > > Many students have submitted CIMS acount requests because they are > enrolled in UA.0201-005. This is just a heads up that I am rejecting > all of these in the request system since the class accounts should be > made directly by the systems staff with use of the class roster. This > is Allan Gottlieb's class so I am copying in case he hasn't yet formally > requested class accounts for all his students. > > Best, > Stephanie Hi Stephanie, Thanks for the heads up. Indeed, Allan has requested class accounts for his students in this course, and they have been created by us based on the roster. Allan, in case you don't have it, you may point any student who is unsure of their account status to this link, where they may view their current status and reset their password if desired: https://cims.nyu.edu/webapps/password/reset Thanks, Aric

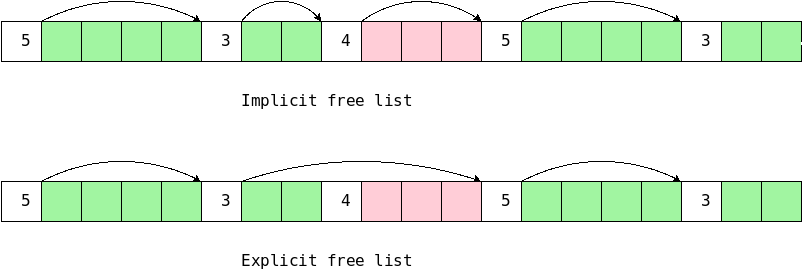

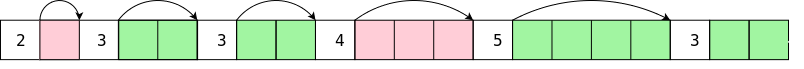

In C pointers and arrays are closely related. As the book says

Any operation that can be achieved by array subscripting can also be done with pointers.

The authors go on to say

The pointer version will in general be faster but, at least to the uninitiated, somewhat harder to understand.

The second clause is doubtless correct; but perhaps not the first. Remember that the 2e was written in 1988 (1e in 1978). Compilers have improved considerably in the past 30+ years and, I suspect, would turn out nearly as fast code for many of the array versions.

The next few sections present some simple examples using pointers.

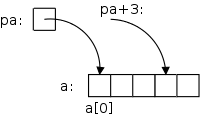

int a[5], *pa; pa = &a[0];

int x = *pa; x = *(pa+1);

x = a[0]; x = *a;

int i; x = a[i]; x = *(a+i);

On the far right we see some code involving pointers and arrays. After the first two lines are executed we get the diagram shown on the near right. pa is a pointer to the first element of the array a. Remember that, as in Java, the first element of a C array a is a[0]. Similarly, pa+3 is a pointer to the fourth element of the array.

But note that pa+3 is just an expression and not a container (no lvalue): you can't put another pointer into pa+3 just like you can't put another integer into i+3.

The next line sets x (which is a container) equal to (the rvalue of) a[0]; the line after that sets x=a[1].

Then we explicitly set x=a[0].

The line after that has the same effect! That is because in C the value of array name equals the address of its first element. (The rvalue of a = the rvalue of &a[0] = the address of a[0] = the lvalue of a[0].) Again note that a (i.e., &a[0]) is an expression, not a variable, and hence is not a container.

Said yet another way a and pa have the same value

(rvalue) but are not the same thing

!

Similarly, the last two lines each have the same effect, this time for a general element of the array a[i].

int a[5], *pa; pa = &a[0]; pa = a; a = pa; // illegal &a[0] = pa; // illegal

Both pa and a are pointers to ints. In particular a is defined to be &a[0]. Although pa and a have much in common, there is an important difference: pa is a variable, its value can be changed; whereas &a[0] (and hence a) is an expression and not a variable. In particular the last two lines on the right are illegal.

Another way to say this is that &a[0] is not an lvalue.

This is similar to the legality of x=y+5;

versus the

illegality of y+5=x;

int mystrlen(char *s) {

int n;

for (n=0; *s!='\0'; s++,n++) ;

return n;

}

The code on the right illustrates how well C pointers, arrays, and strings work together. What a tiny program to find the length of an arbitrary string!

Note that the body of the for loop is null; all the work is done in the for statement itself.

char str[50], *pc;

// calculate str and pc

mystrlen(pc);

mystrlen(str);

mystrlen("Hello, world.");

Note the various ways in which mystrlen() can be called.

decoratea variable with enough stuff to obtain one of the primitive types.

#include <stdio.h>

int x, *p;

int main () {

p = &x;

x = 12;

printf("p = %p\n", p);

printf("*p = %d\n", *p);

p++;

printf("p = %p\n", p);

printf("*p = %d\n", *p);

}

The example on the right below illustrates well the difference between a variable, in this case x, and its address &x. The first value printed is the address of x. This is not 12. Instead, it is some (probably large) number that happens to be the address of x.

In fact when run on my laptop the program produced the following output.

p = 0x7fc41fc78040 *p = 12 p = 0x7fc41fc78044 *p = 0

Let's go over this 7-line main() function line by line.

next integer after xis printed. But there is no integer after x. Hence the program is erroneous! Its output in unpredictable!

Note: Incrementing p does not increment x. Instead, the result is that p points to the next integer after x. In this program there is no further integer after x, so the result is unpredictable and the program is erroneous. Specifically, the value of *p is now unpredictable. On my system the value of *p was 0, but that can NOT be counted on. If, instead of pointing to x, we had p point to A[7] for some large int array A, then the last line would have printed the value of A[8] and the penultimate line would have printed the address of A[8].

Remarks:

#include <stdio.h>

int mystrlen (char *s);

int main () {

char stg[] = "hello";

printf ("The string %s has %d characters\n",

stg, mystrlen(stg));

}

int mystrlen (char s[]) {

int i;

for (i = 0; s[i] != '\0'; i++) ;

return i;

}

int mystrlen (char *s) {

int i = 0;

while (*s++ != '\0')

i++;

return i;

}

On the right we show two versions of a string length function. The first version uses array notation for the string; the second uses pointer notation. The main() program is identical for the two versions so is shown only once.

Note how very close the two string length functions are. This is another illustration of the similarity of arrays and pointers in C.

Note the two declarations

int mystrlen (char *s); int mystrlen (char s[]);

They are used 3 times in the code on the right. In C these two declarations are equivalent. Changing any or all of them to the other form does not change the meaning of the program.

I realize an array does not at first seem the same as a pointer. Remember that the array name itself is equal to a pointer to the first element of the array. Hence declaring

float a[5], *b;

results in a and b having the same type (pointer to float). But the array a has additionally been defined; that is, space for 5 floats has been allocated. Hence a[3] = 5; is legal. b[3] = 5 is syntactically legal, but may be semantically invalid and abort at runtime, unless b has previously be set to point to sufficient space.

In the pointer version of mystrlen() we encounter a common C idiom *s++. First note that the precedence of the operators is such that *s++ means *(s++). That is, we are moving (incrementing) the pointer and examining what it used to point at. We are not incrementing a part of the string. Specifically, we are not executing (*s)++;

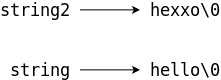

void changeltox (char *s) {

while (*s != '\0') {

if (*s == 'l')

*s = 'x';

s++;

}

}

The program on the right loops through the input string and replaces each occurence of l with x.

The while loop and increment of s could have been combined into a for loop.

This version is written in pointer style.

Homework: Rewrite changeltox() to use array style and a for loop.

void mystrcpy (char *t, char *s) {

while ((*t++ = *s++) != '\0') ;

}

Check out the ONE-liner on the right. Note especially the use of standard idioms for marching through strings and for finding the end of the string.

Slick, very slick!

Even slicker is to note that '\0' has value 0 and testing != 0 is

just testing so the while statement is equivalent to

while (*t++ = *s++);

But the program is scary, very scary!

Question: Why is it scary?

Answer: Because there is no length check.

If the character array t (or equivalently the block of characters t points to) is smaller than the character array s, then the copy will overwrite whatever happens to be located right after the array t.

The lack of such length checks has permitted a number of security breaches.

double f(int *a); double f(int a[]);

The two lines on the right are equivalent when used as a function declaration (or, with the semicolon replaced by a {, as the head line of a function definition). The authors say they prefer the first. For me it is not so clear cut. In mystrlen() above I would indeed prefer char *s as written since I think of a string as a block of chars with a pointer to the beginning.

double dotprod(double A[], double B[]);

However, if I were writing an inner product routine (a.k.a. dot product), I would prefer the array form as on the right since I think of dot product as operating on vectors.

But of course, more important than which one I prefer or the authors prefer, is the fact that they are equivalent in C.

Note: The definition

int a[10];

reserves space for 10 ints and no

pointers; whereas the definition

int *a;

reserves space for no ints and

1 pointer.

#include <stdio.h> void f(int *p);

int main() { int A[20]; // initialize all of A f(A+6); return 0; }

void f(int *p) { printf("legal? %d\n", p[-2]); printf("legal? %d\n", *(p-2)); }

In the code on the right, main() first declares an integer array A[] of size 20 and initializes all its members (how the initialization is done is not important). Then main(), in a effort to protect the beginning of A[], passes only part of the array to f(). Remembering that A+6 means (&A[0])+6, which is &A[6], we see that f() receives a pointer to the 7th element of the array A.

The author of main() mistakenly believed that A[0],..,A[5] are hidden from f(). Let's hope this author is not on the security team for the board of elections.

Since C uses call by value, we know that f() cannot change the value of the pointer A+6 in main(). But f() can use its copy of this pointer to reference or change all the values of A, including those before A[6]. On the right, f() successfully references A[4].

It naturally would be illegal for f() to reference (or worse change) p[-9].

Start Lecture #06

A important point is that, given the declaration int *pa; the increment pa+=3 does not simply add three to the address stored in pa. Instead, it increments pa so that it points 3 integers further forward (since pa is a pointer to an integer). If pc is a pointer to a double, then pc+3 increments pc so that it points 3 doubles forward.

#include <stdio.h>

void main (void) {

int q[] = {11, 13, 15, 19};

int *p = q; // initializes p NOT *p

printf("*p = %d\n", *p);

printf("*p++ = %d\n", *p++);

printf("*p = %d\n", *p);

printf("*++p = %d\n", *++p);

printf("*p = %d\n", *p);

printf("++*p = %d\n", ++*p);

}

To better understand pointers, arrays, ++, and *, let's go over the code on the right line by line. For reference the precedence table is here. The output produced is

*p = 11 *p++ = 11 *p = 13 *++p = 15 *p = 15 ++*p = 16

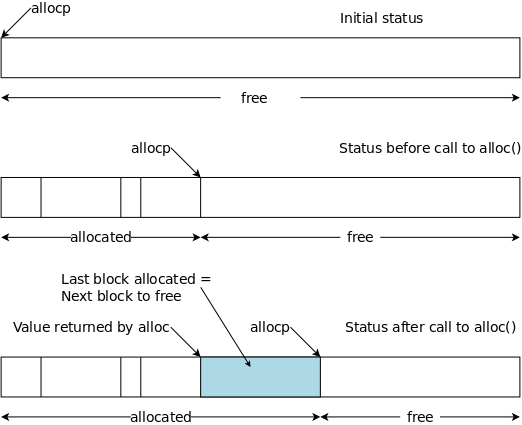

#define ALLOCSIZE 15000 static char allocbuf[ALLOCSIZE]; static char *allocp = allocbuf;

char *alloc(int n) { if (allocp+n ≤ allocbuf+ALLOCSIZE) { allocp += n; return allocp-n; // previous value } else // not enough space return 0; }

void afree (char *p) { if (p>=allocbuf && p<allocbuf+ALLOCSIZE) allocp = p; }

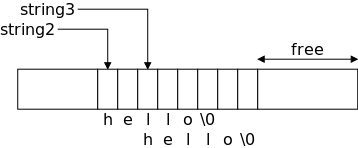

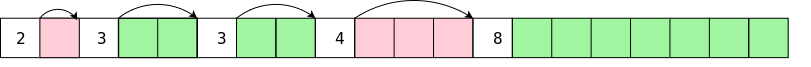

On the right is a primitive storage allocator and freer, alloc() and afree(). This pair of routines distributes and reclaims memory from a buffer allocbuf. The internal pointer allocp points to the boundary between already allocated memory (on the left of allocp in the diagrams) and memory still available for allocation (on the right).

The top picture shows the initial state: nothing is allocated; everything is free.

Looking at the middle (before) diagram we see four blocks that have been allocated and a large free region on the right. The routines alloc() and afree control the internal pointer allocp.

When alloc(n) is called, with a non-negative integer argument, it returns a pointer to a block of n characters and then moves allocp to the right, indicating that these n characters are no longer available.

When afree(p) is called with the pointer returned by alloc(), it resets the state of alloc()/afree() to what it was before the call to alloc().

A very strong assumption is being made that calls

to alloc()/afree() are executed in a stack-like manner, i.e.,

the routines assume that a block being freed is

the

These routines would be useful for managing storage for C automatic, local variables. They are far from general. The standard library routines malloc()/free() do not make this assumption and as a result are considerably more complicated.

Since pointers, not array positions are communicated to users of alloc()/afree(), these users do not need to know the name of the array, which is kept under the covers via static.

Notes:

no object. Although a literal 0 is permitted; most programmers use NULL.

Homework: What is wrong with the following calls to alloc() and afree()? Assume that ALLOCSIZE is big enough.

char *p1, *p2, *p3; p1 = alloc(10); p2 = alloc(20); p3 = alloc(15); afree(p3); afree(p1); afree(p2);