Object Category Recognition

Rob Fergus1, Pietro Perona2, Andrew Zisserman1

1 - Dept. of Engineering Science, Parks Road, Oxford, OX1 3PJ, U.K.

2 - Dept .of Electrical Engineering, California Institute of Technology, MS 136-93, Pasadena, CA 91125, USA.

Overview

The goal of our research is to try and get computers to recognize different categories of object in images. To do this, the computer must be capable of learning a new category looks like, in order that we may then identify new instances in a query image.

Why is it hard?

Below are three pictures with motorbikes in. Not only do the motorbikes all look different, but some of the images have cluttered backgrounds; some have occluded parts; others have scale and lighting variations. Our approach must be able to deal with these factors in order to model the category reliably. Additionally, we would like to be able to learn that these images contain motorbikes with a minimum of supervision, that is we don't want to have to segment the object out from background or manually identify potentially useful features on the object.

|

|

|

So how do we do it?

There are three main issues we need to consider:

1. Representation - How do we represent the object

2. Learning - Using this representation, how do we learn a particular object category

3. Recognition - How do we use the model we have learnt to find further instances in query images.

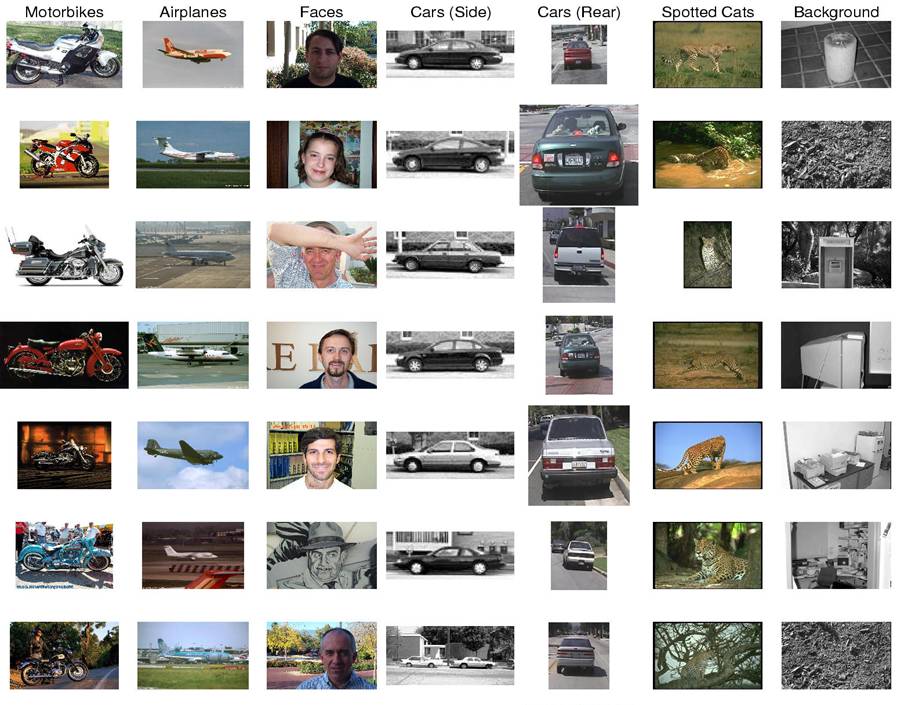

We test our approach on a variety of object categories, including motorbikes, airplanes, faces, cars and spotted cats. Below you can see some samples from them. You can download them from here.

For each dataset, we randomly split it into two halves. We trained on the first half and tested on the second. In testing we were detecting the presence/absence of the object class with the query image. We used a set of background images (i.e. ones that contained random assorted scenes) to be our negative test set. Click on the images below to look at the models learnt for each of the different datasets.

|

|

|

|

|

| Motorbikes | Airplanes | Faces | Spotted cats | Cars from rear |

We plotted ROC curves between using the testing half of each dataset and the background images for each dataset and recorded the point of equal error, that is, when the (probability of a false alarm) = (1 - probability of detection). In the table below we summarize the results of three different experiments:

1. All images we scale normalized, so the object was the same size in each. All system settings were kept the same across all datasets.

2. All images we scale normalized, so the object was the same size in each. All system settings were kept the same across all datasets, except that the scale over which regions were found was tuned on a per-dataset basis.

3. Images weren't scale normalized, instead the model was made scale invariant. Region scale set automatically depending on the size range of the images.

The values quoted in the table are equal error rates (%), so for experiment 1, 7.5% of the 400 motorbike test images we misclassified (thought NOT to contain a motorbike), i.e. 30 images. Similarly, 30 background images we classified as containing a motorbike (out of 400).

| Dataset | Total size of dataset | 1. Scale normalized - all parameters the same | 2.Scale normalized - varying of feature scale | 3. Scale-invariant |

| Motorbikes | 800 | 7.5 | 5.0 | 6.7 |

| Airplanes | 800 | 9.8 | 6.0 | 7.0 |

| Faces | 435 | 3.6 | 3.2 | - |

| Spotted Cats | 200 | 10.0 | 10.0 | - |

| Cars (Rear) | 800 | - | - | 9.7 |

For more details, please see our paper.

Discussion & Future work

While the results of our approach are encouraging: the same approach works well across 5 diverse datasets, there are many shortcomings:

1. The object must be sufficiently large within the image to be decomposable into parts.

2. We currently only model a fixed viewpoint of the object. Many object vary greatly in appearance depending on viewpoint, e.g. cars from the side or rear. We could use a mixture model to handle multiple views.

3. We are very reliant upon the saliency operator picking up regions on the object. If it doesn't fire then the object is often missed. Several options are open: (a) Increase the number of regions per image drastically; (b) Use different types of feature to capture different properties of the object (e.g. curves to model the outline of the object).

Acknowledgements

This work is part of a collaboration between the California Institute of Technology and the University of Oxford. Funding was provided by the UK EPSRC; Caltech Center for Neuromorphic Systems Engineering and EC CogViSys project. Many thanks to Timor Kadir for advice on the region detection and D. Roth for providing the Cars (Side) dataset.

Source code

I have code available for distribution for both the CVPR'03 paper "Object class recognition by unsupervised scale-invariant learning" and also the CVPR'05 paper "A sparse object category model for efficient learning and exhaustive recognition". The CVPR'03 code is Linux only. The CVPR'05 code works under both Windows and Linux and uses the VXL libraries. Please email me if you want the code.

Links

- Visual Geometry Group, University of Oxford, UK.

- Vision group, California Institute of Technology.

References

Weakly Supervised Scale-Invariant Learning of Models for Visual Recognition. Fergus, R. , Perona, P. and Zisserman, A. International Journal of Computer Vision, in print, 2006 [PDF]

Object Class Recognition by Unsupervised Scale-Invariant Learning. Fergus, R. , Perona, P. and Zisserman, A. Proc. of the IEEE Conf on Computer Vision and Pattern Recognition 2003. [PDF]

A Sparse Object Category

Model for Efficient Learning and Exhaustive Recognition. Fergus, R. , Perona, P. and Zisserman,

A. Proc. of the IEEE Conf on Computer Vision and Pattern Recognition 2005.

[PDF]